Investigating a Darknet Site, the Hard Way

Background

I've spent some time looking at various darknet sites and trying to figure out legal ways to identify the IPv4 or IPv6 address of the server on which each site is hosted. I'm writing this up because many people have asked how I do it. This is part of a presentation and training I've given to various organizations over the past five years. The NDAs have now expired, so I'm able to publish it.

Curiosity

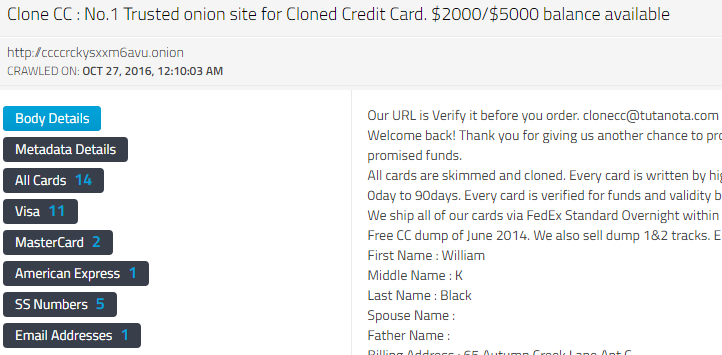

Let's say you stumble across a website at an onion address. For example, this carding site:

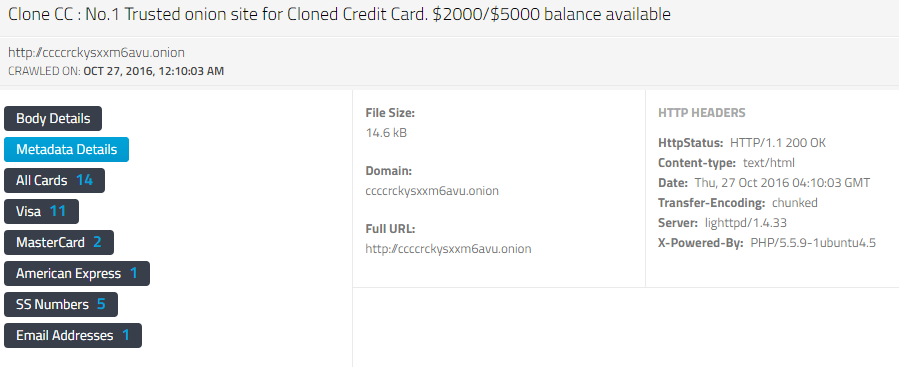

It's a fairly typical site hosting a forum for people to share and sell stolen credit card data. The software is pretty typical web forum software running on a web server of some kind. What kind? Well, you can fire up developer tools in your preferred browser and watch the conversation with the web server. This will give you a ton of information, but what we want to focus on here is the Response Headers. These headers generally tell you about the server software and other details of the HTTP response. A potentially easier way to do this would be to put the site into BuiltWith and get a response; however, BuiltWith doesn't support .onion sites. I cheated and used DarkOwl's engine, by clicking on the "metadata details" button in the snapshot of the site above.

The "HTTP HEADERS" section on the right gives you some clues.

Server: lighttpd/1.4.33

X-Powered-By: PHP/5.5.9-1ubuntu4.5

One Level Deeper

The "Server" header should report the web server software used to serve up the page. In this case, the web server is Lighttpd, version 1.4.33. Generally, this information is accurate. Of course, like any software, someone can change the response and lie, but that's pretty rare. What is becoming more common is to strip the header down to the minimum. In this case, that would be just "lighttpd" without the version number appended. If that were the case, you would have to do some more testing to figure out which version of the web server is likely serving up the content.

The web server also helpfully provides the non-standard "X-Powered-By" header, which is "PHP/5.5.9-1ubuntu4.5". Parsing this out, it's PHP version 5.5.9, and the "-1ubuntu4.5" suffix marks it as a custom build packaged by Ubuntu Linux. PHP is a popular scripting language generally used for serving dynamic content on websites. Again, this could be a false version, but generally, it's accurate.
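To make the "parsing this out" step concrete, here is a minimal Python sketch that splits the two header values quoted above into product, version, and packaging suffix. The function names `parse_product` and `split_distro_suffix` are hypothetical, and the sketch assumes the simple `product/version` form seen on this site; real headers can be messier or deliberately misleading.

```python
def parse_product(value):
    """Split a header value like 'lighttpd/1.4.33' into (product, version).

    The version is None when the server omits it (for example, just 'lighttpd').
    """
    product, _, version = value.strip().partition("/")
    return product, (version or None)


def split_distro_suffix(version):
    """Split '5.5.9-1ubuntu4.5' into the upstream version and the packaging suffix."""
    upstream, _, suffix = version.partition("-")
    return upstream, (suffix or None)


# The two header values reported for the site in this post.
headers = {
    "Server": "lighttpd/1.4.33",
    "X-Powered-By": "PHP/5.5.9-1ubuntu4.5",
}

server, server_version = parse_product(headers["Server"])
language, language_version = parse_product(headers["X-Powered-By"])
upstream, packaging = split_distro_suffix(language_version)

print(server, server_version)
print(language, upstream, packaging)
```

When the version comes back as `None`, you are in the stripped-down case described above and need other tests to narrow down the version.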

Investigating

The next step is to take the software and version number and determine how current it is. The current version of the Lighttpd web server is 1.4.48, as of 11 November 2017, so we now know this web server is out of date. How far out of date? Well, 1.4.33 was released on 27 September 2013, which makes it just over 4 years out of date. I bet there are a few remotely exploitable bugs and vulnerabilities which we could take advantage of, if we wanted to possibly violate laws by breaking and entering into the server.
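The "how far out of date" arithmetic is easy to check with a few lines of Python. This is only a sketch using the dates quoted in this post (the PHP release date is the one given in the next paragraph); the dictionary layout and variable names are assumptions for illustration.

```python
from datetime import date

# Reference date: the lighttpd 1.4.48 release date quoted in the post.
as_of = date(2017, 11, 11)

# Release dates as stated in the post.
released = {
    "lighttpd 1.4.33": date(2013, 9, 27),
    "PHP 5.5.9-1ubuntu4.5": date(2014, 10, 29),
}

# Age of each component in days and in approximate years.
ages = {}
for name, release_date in released.items():
    days = (as_of - release_date).days
    ages[name] = (days, days / 365.25)
    print(f"{name}: {days} days, about {days / 365.25:.1f} years old")
```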

What about the PHP version? Well, it looks like it was released on 29 October 2014 and has had at least 15 CVEs since then. CSO Online has a pretty good overview of what a CVE is and why one would care about them. Similar to the web server, it seems this "application server" is about 3 years out of date. Again, I bet there are a few remotely exploitable bugs and vulnerabilities which we could take advantage of, if we wanted to possibly violate laws by breaking and entering into the server.

More Curiosity

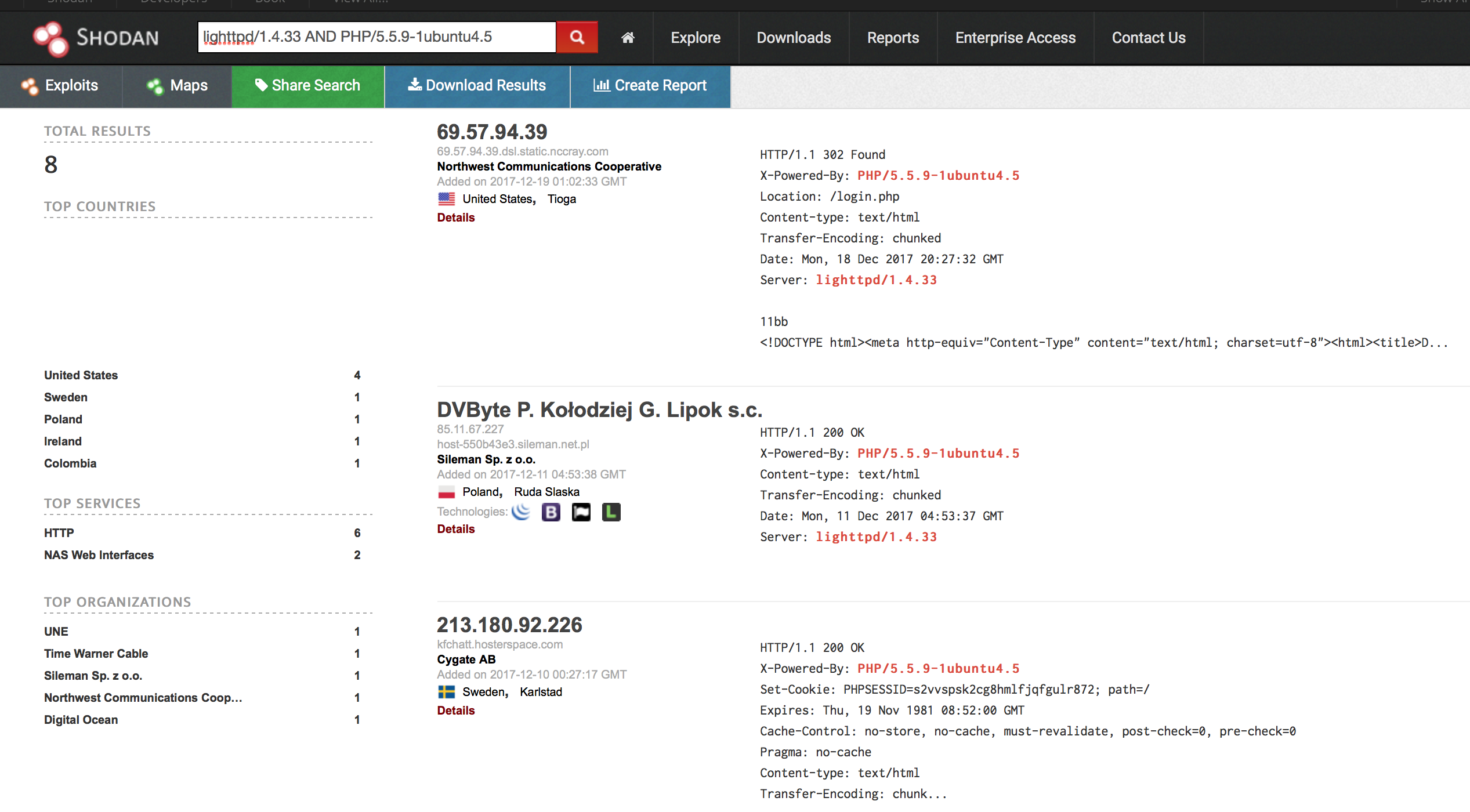

Since we're not about to break into a server, how else can we find this server? We need a service which has scanned wide swaths of the internet and recorded all these details. There are a few of these services, but I generally use Shodan. Here's an example search run at the time of writing this blog post.

It shows 8 servers with the combination of the lighttpd web server at version 1.4.33 and the PHP app server at version 5.5.9-1ubuntu4.5. When I ran this search years ago, there were about 65 servers scattered around the world. My next step was to write a fairly simple script to connect to each IP address and make an HTTP GET request for the onion domain. One of the IP addresses responded with the exact same content as seen in the first picture above. Further, at the very bottom of that page is a PGP key, which also matched the content on the responding IP address. I used curl to do this, but you can also use wget or other tools.
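The original curl script isn't shown here, so the following is only a minimal Python sketch of the same idea, not the script itself. It assumes that "a GET request for the onion domain" means sending the onion address in the `Host` header, and that an exact SHA-256 match of the response body counts as "the same content". The names `fetch_as_host`, `fingerprint`, and `find_matches` are hypothetical.

```python
import hashlib
import http.client


def fetch_as_host(ip, host, path="/", port=80, timeout=10):
    """GET `path` from `ip` while presenting `host` in the Host header.

    Returns the response body as bytes, or None if the request fails.
    """
    conn = http.client.HTTPConnection(ip, port, timeout=timeout)
    try:
        conn.request("GET", path, headers={"Host": host})
        return conn.getresponse().read()
    except (OSError, http.client.HTTPException):
        return None
    finally:
        conn.close()


def fingerprint(body):
    """SHA-256 hex digest of a response body."""
    return hashlib.sha256(body).hexdigest()


def find_matches(candidates, host, reference_body, fetch=fetch_as_host):
    """Return the candidate IPs whose response is byte-identical to the reference.

    `fetch` is injectable so the matching logic can be exercised without
    touching the network.
    """
    wanted = fingerprint(reference_body)
    matches = []
    for ip in candidates:
        body = fetch(ip, host)
        if body is not None and fingerprint(body) == wanted:
            matches.append(ip)
    return matches
```

In use, `candidates` would be the list of IP addresses from the search results, `host` the onion address, and `reference_body` the page as retrieved over Tor. An exact hash comparison is strict: a page that embeds timestamps or session tokens will never match byte for byte, so a looser comparison may be needed on such sites.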

After I had the main page matching, I sampled some of the posts in the forum and found that what was hosted on the onion matched what was hosted on the IP address. This provided enough evidence that the onion site and the site at the IP address were hosting the same content to reliably state that the onion was hosted at a single IP address.
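The two checks described here, the matching PGP key and the sampled posts, can be sketched as follows. This is an illustration under assumptions: that the key on the page is an ASCII-armored public key block, and that pages have already been fetched into dictionaries mapping path to content. The names `extract_pgp_key` and `sample_agreement` are hypothetical.

```python
import re

# Assumes the key is published as a standard ASCII-armored public key block.
PGP_BLOCK = re.compile(
    r"-----BEGIN PGP PUBLIC KEY BLOCK-----.*?-----END PGP PUBLIC KEY BLOCK-----",
    re.DOTALL,
)


def extract_pgp_key(page_text):
    """Return the first armored PGP public key block in the page, or None.

    Lines are stripped and blank lines dropped, so differences in line
    endings or trailing whitespace do not affect the comparison.
    """
    match = PGP_BLOCK.search(page_text)
    if match is None:
        return None
    lines = (line.strip() for line in match.group(0).splitlines())
    return "\n".join(line for line in lines if line)


def sample_agreement(onion_pages, ip_pages):
    """Fraction of sampled onion paths with identical content at the IP address."""
    if not onion_pages:
        return 0.0
    same = sum(
        1 for path, body in onion_pages.items() if ip_pages.get(path) == body
    )
    return same / len(onion_pages)
```

A matching key plus a high agreement ratio across a sample of posts is the kind of evidence described above; how many posts to sample is a judgment call.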

Conclusion

What we've just walked through is a simple example of how to investigate a random darknet site. It's mostly curiosity and an analytic process to figure out what's hosted where. It's not always this easy or straightforward. In fact, most darknet sites are well hidden and not so easily mapped to the real world. Good luck and happy investigating!

Disclaimer

I originally started talking about this process in 2012 when I was CEO of the Tor Project. It was a more manual process then. In 2016, DarkOwl gave me access to their crawling engine, and based on audience feedback, the pictures are much easier to understand than the command line screenshots I was using for the prior 4 years. In April 2017, I joined DarkOwl. However, the process is the same and it's all fueled by the same curiosity.

Credits

- The staircase is a picture from the Saint James Club in Paris, France. Copyright 2017 Andrew Lewman.

- DarkOwl for the two screenshots of the .onion site.

- Shodan for the screenshot of the Server and X-Powered-By internet results.

- The people who encouraged me to publish this part of my presentation and training, namely ML and SLC.