Thoughts on Crawling and Understanding the Darknet

Introduction

This post attempts to define darknets as I understand them and walks through a couple of scenarios for crawling sites discovered in darknets. The goal of the crawling is to help develop demographics about what sort of content is available, and to build an index of that content that can be queried in many ways, such as through a search interface. A number of people are working on these topics in highly academic ways; my goal is to give a broad overview of some approaches using common tools available today.

Why darknets?

Darknets have been around for a decade or so. Some of the best-known darknet sites are on the Tor network: Silk Road, Wikileaks, Silk Road 2, StrongBox, and so on. For better or worse, Silk Road is what helped bring darknets to the masses.

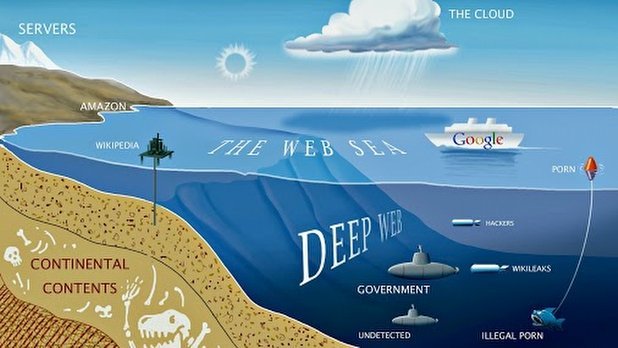

The current trend in information security is to build insight into, and intelligence from, the underground or the darknet. Many companies are focused on the "darknet." The idea is to learn what's below the surface, such as near-future attacks or threats, before they affect ordinary companies and people. For example, an intelligence agency wants to learn about clandestine operations within its borders, or a financial company wants to learn about attacks on its services and customers before anyone else does.

I'm defining the darknet as any service that requires special software to access it, such as:

1. Tor's hidden services,

2. I2P,

3. Freenet, and

4. GNUnet.

There are many more services out there, but in effect they all require special software to access content or services in their own address space.

Most darknet systems are really overlay networks on top of TCP/IP or UDP/IP. The goal is to create an addressing system other than plain IP addresses (of either the v4 or v6 flavor). XMPP, for example, could be considered an overlay network, but not a darknet, because it relies heavily on public IPv4/IPv6 addressing to function. It's also trivial to learn detailed metadata about conversations by watching either an XMPP stream or an XMPP server.
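To make "a different addressing system" concrete, here is a minimal Python sketch of how Tor derives an onion address from a service's own public key, so no registry has to hand addresses out. It follows the published formats as I understand them: a v2 address (the 80-bit kind, now deprecated) is the first 10 bytes of a SHA-1 hash of the DER-encoded RSA public key, base32-encoded; a v3 address encodes a 32-byte ed25519 public key, a two-byte SHA3-256 checksum, and a version byte. The keys in the example are placeholder bytes, not real keys.

```python
import base64
import hashlib


def onion_v2_address(der_public_key: bytes) -> str:
    """v2 (deprecated): base32 of the first 80 bits of SHA-1(DER public key)."""
    digest = hashlib.sha1(der_public_key).digest()[:10]  # 10 bytes = 80 bits
    return base64.b32encode(digest).decode("ascii").lower() + ".onion"


def onion_v3_address(ed25519_public_key: bytes) -> str:
    """v3: base32(pubkey || checksum || version), 56 characters before .onion."""
    if len(ed25519_public_key) != 32:
        raise ValueError("v3 onion keys are 32-byte ed25519 public keys")
    version = b"\x03"
    checksum = hashlib.sha3_256(
        b".onion checksum" + ed25519_public_key + version
    ).digest()[:2]
    raw = ed25519_public_key + checksum + version  # 35 bytes = 280 bits, no padding
    return base64.b32encode(raw).decode("ascii").lower() + ".onion"


if __name__ == "__main__":
    # Placeholder bytes stand in for real keys; this sketch generates no keys.
    print(onion_v2_address(b"placeholder DER-encoded RSA public key"))
    print(onion_v3_address(bytes(range(32))))
```

Because the address is simply a function of the key, anyone can mint one offline, without asking anybody for a slice of the space.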

The vastness of address spaces

Let's expand on address space. In the "clearnet" we have IP addresses of two flavors, IPv4 and IPv6. Most people are familiar with IPv4, the classic xxx.xxx.xxx.xxx address. IPv6 addresses are long in order to create a vast address space for the world to use, say, for the Internet of Things, or for a few trillion devices all online at once. IPv6 is actually fun and fantastic, especially when paired with IPsec, but that is a topic for another post. The IPv4 address space is 32 bits wide, or roughly 4.3 billion addresses. The IPv6 address space is 128 bits wide, or about 3.4x10^38 addresses: trillions upon trillions. There are some quirks to IPv4, such as NAT, that let us use more than 4.3 billion addresses, but the scale of the spaces is what we care about most, and IPv6 is vastly larger.

Overlay networks are built to create, or use, different properties of an address space. Rather than going to a global governing body and asking for a slice of the space to call your own, an overlay network generally lets you claim addresses without a central authority.
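The sizes above are just powers of two. A quick Python sketch prints the exact counts for IPv4, IPv6, and an 80-bit overlay space like Tor's older onion addresses (the table of names is mine, chosen for illustration):

```python
# Number of distinct addresses an n-bit address space can hold: 2**n.
ADDRESS_BITS = {
    "IPv4": 32,
    "80-bit overlay (Tor v2)": 80,
    "IPv6": 128,
}


def address_space_size(bits: int) -> int:
    """Return how many distinct addresses fit in a `bits`-wide space."""
    return 2 ** bits


if __name__ == "__main__":
    for name, bits in ADDRESS_BITS.items():
        size = address_space_size(bits)
        # Exact integer with separators, plus scientific notation for scale.
        print(f"{name:>24}: 2^{bits} = {size:,} (~{size:.2e})")
```

The IPv4 line comes out to 4,294,967,296, the "roughly 4.3 billion" above, while IPv6 lands near 3.4x10^38.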

Defining darknets

There are other terms for darknets, such as the deep web, defined in one dictionary as "the portion of the Internet that is hidden from conventional search engines, as by encryption; the aggregate of unindexed websites," as in "private databases and other unlinked content on the deep web."

In other words, it's the content you won't find on Google, Bing, or Yahoo, no matter how advanced your search prowess.

How big is the darknet?

No one knows how large the darknet is; by definition, its services and content are not easy to find. However, a number of people are working to figure out its scope and size and to further classify the content found on it. There are a few amateur sites trying to index various darknets, such as Ahmia, and others reachable only with darknet software. Some researchers are working on the topic as well; see Dr. Owen's video presentation, "Tor: Hidden Services and Deanonymisation." A public example is DARPA's MEMEX program, whose open catalog of tools is a fine starting point.

What I'd like to see is a broader survey of all alternate Internet services (Tor, I2P, Freenet, GNUnet, etc.). I keep hoping DARPA's MEMEX project will do this, but nothing has been published as of yet. A few three-letter agencies around the world are indeed crawling all possible services, but under classified conditions, so the results are unlikely to be published.

At one point, I ran a YaCy node crawling I2P and Tor, which simply started from a directory of sites and crawled outward from there. It found and indexed the content on a few hundred sites, but the unreliability of the sites (at the time) left much to be desired. There was also the issue of all the questionable image content it indexed, and I didn't want that data tied to me. Let's run a thought experiment about how to do this at scale.

Operationally Crawling the Darknet

Darknet address spaces are large. The smaller spaces are 80 bits wide, meaning roughly 1.2x10^24 possible addresses. In the real world, however, Ahmia has found around 4,200 Tor hidden services that link to one another in some fashion. Project Artemis has started to crawl a number of them as well, but again, it focused only on Tor hidden services. Consider the need to crawl trillions of trillions of addresses to find every possible combination, and then to figure out which ports the services are available on (HTTP, HTTPS, etc.). This is a Google- or Bing-sized problem, except larger due to the vastness of the addressable space.
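To put "trillions of trillions" in perspective, here is a back-of-the-envelope sketch of how long a fleet would need to probe every address in an 80-bit space exactly once. The fleet sizes and per-worker probe rates are hypothetical, and the math assumes perfect parallelism with no retries or re-crawls, which flatters the result.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def sweep_years(address_bits: int, workers: int, probes_per_sec_per_worker: float) -> float:
    """Years to probe every address once, assuming perfect parallelism and no retries."""
    total_addresses = 2 ** address_bits
    probes_per_sec = workers * probes_per_sec_per_worker
    return total_addresses / probes_per_sec / SECONDS_PER_YEAR


if __name__ == "__main__":
    # Hypothetical fleet sizes and rates; the higher rates are deliberately generous.
    for workers, rate in [(300_000, 1), (300_000, 1_000), (1_000_000, 100_000)]:
        print(f"{workers:>9,} workers at {rate:>7,} probes/s each: "
              f"{sweep_years(80, workers, rate):.1e} years")
```

Under these assumptions the answers range from over a hundred billion years down to roughly 380,000 years for the most generous row, which is why any practical crawl has to start from addresses that are already known or announced rather than from blind enumeration.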

Brute Force Method

A few hundred thousand virtual machines crawling 7x24 over the span of a few years could crawl a significant portion of various darknets. Storing all of the crawled data, and then re-crawling the working addresses, would likely require NSA-, Google-, or Bing-sized infrastructure. Some basic Bayesian algorithms could automate classifying the content found in the results. This would make a fantastic data stream to resell to infosec companies to help them understand the threats coming in the near future. I've met people working for three-letter agencies who claim they are actually using this approach to find content. How to store all of this was the topic of an earlier blog post, "The IT Manager View of NSA Spying." It would be a fantastic academic research project to see what content is actually out on the darknet, beyond the media's fascination with child abuse material (which is regularly found on the clearnet too).
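As a sketch of the "basic Bayesian" classification mentioned above, here is a tiny multinomial naive Bayes classifier with add-one smoothing in plain Python. The labels and training snippets are invented for illustration; a real pipeline would need far more labeled pages and a better tokenizer.

```python
import math
from collections import Counter, defaultdict


def tokenize(text: str) -> list:
    """Crude tokenizer: lowercase, split on whitespace, trim punctuation."""
    words = (w.strip(".,;:!?()\"'").lower() for w in text.split())
    return [w for w in words if w]


class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.doc_counts = Counter()              # label -> number of training docs
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        self.vocab = set()

    def train(self, text: str, label: str) -> None:
        words = tokenize(text)
        self.doc_counts[label] += 1
        self.word_counts[label].update(words)
        self.vocab.update(words)

    def classify(self, text: str) -> str:
        """Return the label with the highest log posterior for `text`."""
        total_docs = sum(self.doc_counts.values())
        words = tokenize(text)
        best_label, best_score = None, -math.inf
        for label, n_docs in self.doc_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            # log P(label) + sum over words of log P(word | label)
            score = math.log(n_docs / total_docs)
            score += sum(math.log((counts[w] + 1) / denom) for w in words)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


if __name__ == "__main__":
    # Invented training snippets and labels, for illustration only.
    clf = NaiveBayes()
    clf.train("buy cheap cards dumps cvv fresh", "marketplace")
    clf.train("fresh cvv dumps with pin", "marketplace")
    clf.train("secure drop submit documents to journalists", "whistleblowing")
    clf.train("leak documents securely to our newsroom", "whistleblowing")
    print(clf.classify("cvv dumps for sale"))  # marketplace
```

Working in log space avoids underflow when multiplying many small word probabilities, and the add-one smoothing keeps an unseen word from zeroing out a whole label.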

Managing the crawl

The other challenge here is how to manage a few hundred thousand virtual machines. Tools like Chef and Puppet are just two of the many ways to manage the virtual machines at the operating-system level. Even Amazon Web Services (AWS) doesn't make systems management at this scale very easy. One or more coordination systems will be needed to track which machine is crawling which portion of the address space. And then there are the indexers, the correlators, the databases, and so on.
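One simple way a coordinator could decide which machine crawls which portion of the address space is static partitioning: split the integer range of an n-bit space into near-equal contiguous shards, one per worker. The function names below are hypothetical, and rendering an integer as a v2-style onion hostname is an illustrative assumption; a real coordinator would also need to reassign shards when workers fail.

```python
import base64


def shard_range(address_bits: int, workers: int, worker_id: int) -> tuple:
    """Half-open [start, end) slice of the 2**address_bits space owned by worker_id.

    The first (total % workers) shards get one extra address, so shard sizes
    differ by at most one. Assumes workers <= 2**address_bits.
    """
    if not 0 <= worker_id < workers:
        raise ValueError("worker_id out of range")
    base, extra = divmod(2 ** address_bits, workers)
    start = worker_id * base + min(worker_id, extra)
    end = start + base + (1 if worker_id < extra else 0)
    return start, end


def worker_for(address: int, address_bits: int, workers: int) -> int:
    """Inverse lookup: which worker owns a given integer address."""
    base, extra = divmod(2 ** address_bits, workers)
    boundary = extra * (base + 1)  # end of the run of larger shards
    if address < boundary:
        return address // (base + 1)
    return extra + (address - boundary) // base


def index_to_onion_v2(n: int) -> str:
    """Render an 80-bit integer as a v2-style 16-character onion hostname."""
    return base64.b32encode(n.to_bytes(10, "big")).decode("ascii").lower() + ".onion"


if __name__ == "__main__":
    start, end = shard_range(80, 300_000, worker_id=42)
    print(index_to_onion_v2(start), "...", index_to_onion_v2(end - 1))
```

Because both directions are pure arithmetic, the coordinator needs no lookup table, only the worker count and the address width.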

Apache Hadoop is probably the best starting point. IBM, Hitachi, and Google all have some thoughts on how to manage and profit from an infrastructure such as this. The Google example shows them processing a petabyte of information on 8,000 computers. In the world of big data, at this scale, you need to manage thousands of systems as one system, which is exactly what Chef and Puppet are designed to do. This keeps your sysadmin costs affordable, and virtual networking between the virtual machines keeps your network administration costs low. However, if you build your own hardware cluster to run these few hundred thousand virtual machines, you're building an infrastructure the size of Google's, Bing's, or Amazon's.
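Hadoop's core pattern is map, shuffle, reduce. The sketch below is not the Hadoop API; it runs that same pattern in a single Python process to build an inverted index (term to pages) from crawled text, which is the kind of job Hadoop would spread across the cluster. Hadoop Streaming can run mappers and reducers written as scripts that read stdin and write stdout, so logic like this could, in principle, be adapted to it. The page URLs are made up.

```python
from collections import defaultdict
from typing import Iterable, Iterator, Tuple


def map_page(url: str, text: str) -> Iterator[Tuple[str, str]]:
    """Mapper: emit one (term, url) pair per distinct term on a page."""
    for term in set(text.lower().split()):
        yield term, url


def reduce_term(term: str, urls: Iterable[str]) -> Tuple[str, list]:
    """Reducer: collapse every URL seen for a term into a sorted posting list."""
    return term, sorted(set(urls))


def run_job(pages: dict) -> dict:
    """Run map -> shuffle -> reduce in one process to build an inverted index."""
    shuffled = defaultdict(list)
    for url, text in pages.items():            # map
        for term, value in map_page(url, text):
            shuffled[term].append(value)        # shuffle: group values by key
    return dict(reduce_term(t, u) for t, u in sorted(shuffled.items()))  # reduce


if __name__ == "__main__":
    # Hypothetical pages keyed by made-up onion URLs.
    index = run_job({
        "http://examplesiteaaaa.onion/": "Market listings and forum",
        "http://examplesitebbbb.onion/": "Forum for privacy tools",
    })
    print(index["forum"])
```

In a real cluster the shuffle is the expensive network step, which is one reason the choice of key (here, the term) matters so much for performance.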

A future post could explore the costs of hosting all of this on Amazon or Microsoft Azure, and/or of building out the entire system yourself. The bigger question is: to what business end? Either way, we're talking about costs on the order of millions of dollars per month. Is the content on the darknet worth that much? Do we think the darknet is large enough, with enough quality content, to develop insight that justifies this cost? My gut says it isn't, but this is based solely on my experience at Tor and on having seen the small number of sites available relative to the vastness of the clearnet. However, much like exploring other solar systems in outer space, it's not clear anyone has found the entire set of darknet sites available.
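The "millions of dollars per month" figure is easy to sanity-check with simple arithmetic. The sketch below assumes always-on VMs billed hourly plus flat-rate storage; every price and size in it is a placeholder, not a quote from any provider.

```python
HOURS_PER_MONTH = 730  # common billing approximation: 8,760 hours / 12 months


def monthly_cost(vm_count: int, usd_per_vm_hour: float,
                 storage_tb: float = 0.0, usd_per_tb_month: float = 0.0) -> float:
    """Rough monthly bill: always-on compute plus flat-rate storage."""
    compute = vm_count * usd_per_vm_hour * HOURS_PER_MONTH
    storage = storage_tb * usd_per_tb_month
    return compute + storage


if __name__ == "__main__":
    # Every price and size below is a placeholder, not a provider quote.
    brute_force = monthly_cost(300_000, 0.02, storage_tb=10_000, usd_per_tb_month=20)
    targeted = monthly_cost(50, 0.02, storage_tb=10, usd_per_tb_month=20)
    print(f"brute force: ${brute_force:,.0f}/month")
    print(f"targeted:    ${targeted:,.0f}/month")
```

Even at a hypothetical two cents per VM-hour, a few hundred thousand always-on machines land in the millions per month, while a fleet of tens of machines stays in the hundreds or low thousands.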

Targeted Method

A more targeted method is to simply run a directory server for some darknet services and crawl each site as it's announced or added to the global directory. For instance, there are hidden wikis and eepsites dedicated to being handcrafted lists of darknet addresses; these make a fine seed list with which to begin a crawl. This approach probably needs only tens of virtual machines, with terabytes of storage for the resulting content indexes.
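Crawling outward from a seed list is a breadth-first search over links. Here is a minimal Python sketch using only the standard library; the `fetch` function is injected, so in practice it would route requests through Tor's or I2P's proxy, while in the example a dictionary stands in for the network. The .onion hosts are made up, and the crawler simply skips clearnet links.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, Iterable, List, Optional
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag fed to it."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def is_onion(url: str) -> bool:
    """True for URLs whose host ends in .onion (clearnet links are skipped)."""
    return (urlparse(url).hostname or "").endswith(".onion")


def crawl(seeds: Iterable[str], fetch: Callable[[str], Optional[str]],
          max_pages: int = 1000) -> Dict[str, str]:
    """Breadth-first crawl outward from a seed list, staying on .onion hosts."""
    seeds = list(seeds)
    queue, seen, pages = deque(seeds), set(seeds), {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # unreachable; darknet sites are often offline
            continue
        pages[url] = html
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            link = urldefrag(urljoin(url, href)).url  # absolute, no #fragment
            if is_onion(link) and link not in seen:
                seen.add(link)
                queue.append(link)
    return pages


if __name__ == "__main__":
    # A dict stands in for the network; the .onion hosts are made up.
    fake_web = {
        "http://seedaaaa.onion/": '<a href="/about">About</a> <a href="http://otherbbbb.onion/">B</a>',
        "http://seedaaaa.onion/about": "<p>About us</p>",
        "http://otherbbbb.onion/": '<a href="http://example.com/">clearnet</a>',
    }
    print(sorted(crawl(["http://seedaaaa.onion/"], fake_web.get)))
```

The `seen` set keeps the crawl from revisiting pages, and `max_pages` bounds each run so an unexpectedly large site can't stall the whole crawl.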

Concurrently, by modifying the open-source code of one of these systems, one could record every time an address is announced, and thereby automatically build a directory of available addresses. Once the list exists, one can check each site for HTTP/HTTPS access and crawl whatever content appears there. A more complete directory might also include the results of probing common services on each address, to learn what's hosted there. I'm thinking of SSH, XMPP, HTTP, HTTPS, SMTP, and so on. Remember, we're not trying to identify the address owners, just what services and/or content are hosted at each address.
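Probing common services can be done through Tor's SOCKS5 proxy, which resolves .onion names itself when the request carries a hostname. Below is a minimal Python sketch of a SOCKS5 CONNECT probe (RFC 1928, no authentication). It assumes a local tor daemon on its usual default of 127.0.0.1:9050 (Tor Browser typically uses 9150), and it uses the standard well-known ports, with 5222 for XMPP clients. A successful CONNECT only shows that something accepted the connection, not what it is.

```python
import socket
import struct

# Well-known ports for the services worth checking on each address.
COMMON_PORTS = {"ssh": 22, "smtp": 25, "http": 80, "https": 443, "xmpp": 5222}


def socks5_connect_request(host: str, port: int) -> bytes:
    """SOCKS5 CONNECT addressed by domain name (ATYP 0x03), so the proxy,
    not the local resolver, handles the .onion name."""
    name = host.encode("ascii")
    if len(name) > 255:
        raise ValueError("hostname too long for SOCKS5")
    return b"\x05\x01\x00\x03" + bytes([len(name)]) + name + struct.pack(">H", port)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes or raise if the proxy hangs up early."""
    data = b""
    while len(data) < n:
        chunk = sock.recv(n - len(data))
        if not chunk:
            raise ConnectionError("proxy closed the connection")
        data += chunk
    return data


def probe(host: str, port: int, proxy=("127.0.0.1", 9050), timeout: float = 60.0) -> bool:
    """True if the SOCKS5 proxy reports a successful CONNECT to host:port."""
    try:
        with socket.create_connection(proxy, timeout=timeout) as sock:
            sock.sendall(b"\x05\x01\x00")  # version 5, one method offered: no auth
            if _recv_exact(sock, 2) != b"\x05\x00":
                return False
            sock.sendall(socks5_connect_request(host, port))
            reply = _recv_exact(sock, 4)  # VER, REP, RSV, ATYP
            return reply[1] == 0x00       # REP 0x00 means "succeeded"
    except OSError:  # refused, timed out, or proxy unavailable
        return False


def probe_services(host: str, **kwargs) -> dict:
    """Map each service name in COMMON_PORTS to whether its port answered."""
    return {name: probe(host, port, **kwargs) for name, port in COMMON_PORTS.items()}
```

A call such as `probe_services("someaddress.onion")` (a placeholder address) returns one boolean per service, which can be stored alongside each directory entry.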

This is something that can run within a few hundred, or maybe a thousand, virtual machines, and it is far easier to manage with tools like Apache Hadoop out of the box, so to speak. The I2P and Tor networks combined still have fewer than 10,000 nodes/servers, so a small intelligence agency or firm could easily run a decent percentage of the nodes and collect this data. With Apache Hadoop running the crawls, indexes, and data management, combined with Puppet/Chef for managing the underlying systems, this setup looks far more cost-efficient, with a far quicker ROI for gaining intelligence and learning the topography of the darknets.
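How much would running "a decent percentage of the nodes" reveal? A rough estimate: as I understand Tor's retired v2 design, each hidden service published its descriptor to six hidden-service directories (two replicas, three directories each). The sketch below assumes those directories are a uniformly random set of distinct directories, which is a simplification, and computes the chance that at least one of them is yours. The network size of 3,000 directories in the example is made up.

```python
from math import comb


def descriptor_coverage(total_dirs: int, controlled: int, dirs_per_service: int = 6) -> float:
    """Estimated chance that at least one directory holding a given service's
    descriptor is one of ours.

    Simplifying assumption: the directories responsible for a service are a
    uniformly random set of `dirs_per_service` distinct directories.
    """
    if not 0 <= controlled <= total_dirs or not 0 < dirs_per_service <= total_dirs:
        raise ValueError("need 0 <= controlled <= total_dirs and 0 < dirs_per_service <= total_dirs")
    # Hypergeometric "miss": every responsible directory belongs to someone else.
    miss = comb(total_dirs - controlled, dirs_per_service) / comb(total_dirs, dirs_per_service)
    return 1.0 - miss


if __name__ == "__main__":
    # 3,000 directories is a made-up network size for illustration.
    for ours in (10, 100, 300):
        print(f"{ours:>4} of 3,000 -> {descriptor_coverage(3_000, ours):.1%} of services seen")
```

With these made-up numbers, controlling 100 of 3,000 directories would see roughly 18% of services' descriptors, because six chances at a 1-in-30 slot add up faster than the node share alone suggests.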

Summary

This concludes our short thought experiment. The idea here is to think about how to crawl the vast overlay network, or darknet, address space in order to find, index, and categorize the content and services discovered. Maybe someone wants to create a darknet insight or intelligence service and actually see what it takes to successfully crawl and index the vast unknown. The targeted method shows the most promise, with a quicker ROI and far lower capital and operational costs than the brute-force method.

Hopefully your CISO or CTO could answer why you would want to know about the darknet, what all this means from an operational perspective, what insights and intelligence you could glean from such a project, and how you could profit from the project as well.