High Performance lewman.com, Part Two

Continuing my thoughts on high-performance websites, I want to expand on my own experience with a side hobby: my domain, lewman.com. I'm not talking about this blog, yet. The blog is hosted on WordPress.com, and I'll migrate it to my own server in time.

Content

Optimizing the server is one crucial part of a fast website. Another is the content itself. Websites are getting larger; here are just two links highlighting the issue:

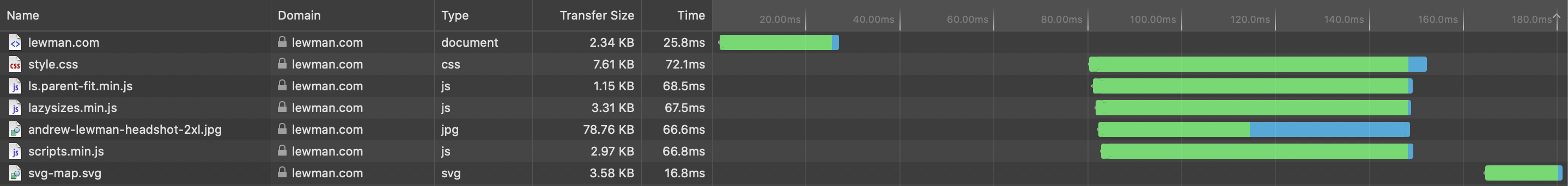

By including many third-party scripts, content, and fonts, and by relying on somewhat automated tools to generate pages, we end up with a lot of code for the browser to download and parse. Let's go back to the image from Part 1 (this didn't start out as a multi-part series), where I analyzed download transfer times.

Now, let's focus on the "Transfer Size" column. The larger the file, the longer it takes to reach your browser, and how long depends on your Internet connection. Whether you're on fiber, Wi-Fi, or mobile all affects how you perceive performance. I used Publii to create the current iteration of the site. For now, the theme I chose and the code it generates are minified and efficient. I hope it stays that way as Publii continues to develop.
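As a rough back-of-the-envelope sketch, download time is about one round trip plus the transfer size divided by bandwidth. The Python below assumes a single request on an already-open connection and ignores TCP slow start, TLS handshakes, and compression; the connection profiles and the 2 MB page weight are illustrative assumptions, not measurements of my site.

```python
def transfer_time_ms(size_bytes: int, bandwidth_mbps: float, rtt_ms: float) -> float:
    """Estimate download time as one round trip plus bits / bandwidth.

    Simplified model: ignores TCP slow start, TLS handshakes and
    compression, so real transfers usually take longer.
    """
    bits = size_bytes * 8
    seconds_on_wire = bits / (bandwidth_mbps * 1_000_000)
    return rtt_ms + seconds_on_wire * 1000


# Hypothetical connection profiles: (bandwidth in Mbit/s, round-trip time in ms).
CONNECTIONS = {
    "fiber": (500.0, 5.0),
    "wifi": (50.0, 20.0),
    "mobile": (5.0, 80.0),
}

if __name__ == "__main__":
    page_bytes = 2_000_000  # assumed page weight of 2 MB
    for name, (mbps, rtt) in CONNECTIONS.items():
        print(f"{name:>6}: {transfer_time_ms(page_bytes, mbps, rtt):8.1f} ms")
```

With these assumed numbers, the same 2 MB page takes about 37 ms on the fiber profile and about 3.3 seconds on the mobile one, which is why transfer size matters most on slow links.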

Over the past 22 years, I've used hand-coded HTML/CSS, PHP-Nuke, WordPress, and Jekyll, and I've written my own content management and publishing system. For the longest time, I just served up my .finger file. The fastest systems are the ones doing the least work. Static site generators produce the fastest sites because the web server doesn't have to wait on database connections or other slow ways of retrieving content; it just serves a file from disk or from an in-memory cache.
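To make the "disk or in-memory cache" point concrete, here is a minimal Python sketch of a static file store: the first request for a path reads the file from disk, and later requests come straight from a dictionary in memory. The class name and the index.html mapping are hypothetical choices for illustration; a real web server also handles cache invalidation, memory limits, and concurrency, which this sketch ignores.

```python
from pathlib import Path


class StaticFileCache:
    """Serve pre-rendered files, reading each one from disk at most once."""

    def __init__(self, root: str) -> None:
        self.root = Path(root).resolve()
        self._cache: dict[str, bytes] = {}
        self.disk_reads = 0  # how many times we actually touched the disk

    def get(self, url_path: str) -> bytes:
        # Map "/" and "/about/" to index.html, as a typical static layout does.
        rel = url_path.lstrip("/")
        if rel == "" or rel.endswith("/"):
            rel += "index.html"
        if rel in self._cache:
            return self._cache[rel]  # cache hit: no disk I/O, no database
        file_path = (self.root / rel).resolve()
        # Refuse paths that escape the site root, e.g. "/../secret.txt".
        if self.root not in file_path.parents:
            raise FileNotFoundError(url_path)
        data = file_path.read_bytes()  # cache miss: one disk read
        self.disk_reads += 1
        self._cache[rel] = data
        return data
```

Every request after the first is a dictionary lookup, which is the whole advantage a static site has over one that has to query a database per page view.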

Around the mid-2000s, I wrote my own system in a mix of Bash and Ruby, with everything served by Jetty. The scripts took plain Markdown files from a simple directory structure, converted them to HTML with pandoc, and wrote everything out to the live site directory on the web server. Jetty was very fast, much faster than Apache or anything else at the time. In experimenting with various web servers, I found Jetty was fast not because its code was inherently better, but because the Java Virtual Machine (JVM) handled threaded requests well and cached data more efficiently than the native FreeBSD or Linux kernel memory managers did. With Jetty, the content was often already in memory and could be served without the latency of a disk read. To speed up the site further, I'd run a crawler against it to preload everything into the memory cache, taking the slow disk drives out of the equation.
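The build-and-warm workflow above can be sketched in Python. This is not my original Bash/Ruby code, just an illustration under assumptions: Markdown sources live under a hypothetical `content/` directory, each `foo.md` becomes `foo.html` in the output directory, and pandoc is on the PATH (`-f markdown -t html -s -o` are standard pandoc options). The same list of output pages doubles as the URL list for a cache-warming crawler; `site_url` is a placeholder.

```python
import subprocess
import urllib.request
from pathlib import Path


def plan_build(src_dir: str, out_dir: str) -> list[tuple[Path, Path]]:
    """Pair every Markdown source with the HTML file it should produce."""
    src, out = Path(src_dir), Path(out_dir)
    pairs = []
    for md in sorted(src.rglob("*.md")):
        # content/posts/a.md -> site/posts/a.html (layout is an assumption)
        html = out / md.relative_to(src).with_suffix(".html")
        pairs.append((md, html))
    return pairs


def pandoc_command(md: Path, html: Path) -> list[str]:
    # Convert Markdown to a standalone (-s) HTML page with pandoc.
    return ["pandoc", str(md), "-f", "markdown", "-t", "html", "-s", "-o", str(html)]


def build(src_dir: str, out_dir: str) -> None:
    """Run pandoc for every source file, writing into the live site directory."""
    for md, html in plan_build(src_dir, out_dir):
        html.parent.mkdir(parents=True, exist_ok=True)
        subprocess.run(pandoc_command(md, html), check=True)


def page_urls(site_url: str, src_dir: str, out_dir: str) -> list[str]:
    """URLs a crawler should request to pull every page into the server's cache."""
    base = site_url.rstrip("/")
    out = Path(out_dir)
    return [f"{base}/{html.relative_to(out).as_posix()}"
            for _, html in plan_build(src_dir, out_dir)]


def warm_cache(urls: list[str], timeout: float = 10.0) -> None:
    # Fetch each page once and discard the body; the useful side effect is
    # the server loading the file into memory for the next real visitor.
    for url in urls:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read()
```

Keeping `plan_build` separate from `build` means the page list is computed once from the source tree, so the crawler never misses a page the build produced.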

At the time, I was taking lessons learned from running global websites at TechTarget and scaling them down to my personal site. The difference in scale is large: TechTarget was serving tens of millions of database-backed page requests per hour, while my personal site handled hundreds of page requests per day, or, to put it on the same timescale, tens of requests per hour.
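To put a rough number on that gap, assume for illustration 300 requests per day for my site and 10 million requests per hour for TechTarget (both are assumed round figures, not logged numbers):

$$
\frac{300\ \text{requests/day}}{24\ \text{hours/day}} = 12.5\ \text{requests/hour},
\qquad
\frac{10^{7}\ \text{requests/hour}}{12.5\ \text{requests/hour}} = 8 \times 10^{5}
$$

Under those assumptions, the large site handled close to a million times the traffic.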

Over the years, native web servers grew faster and faster, and I no longer needed Jetty for the performance gains.

Content Rendering

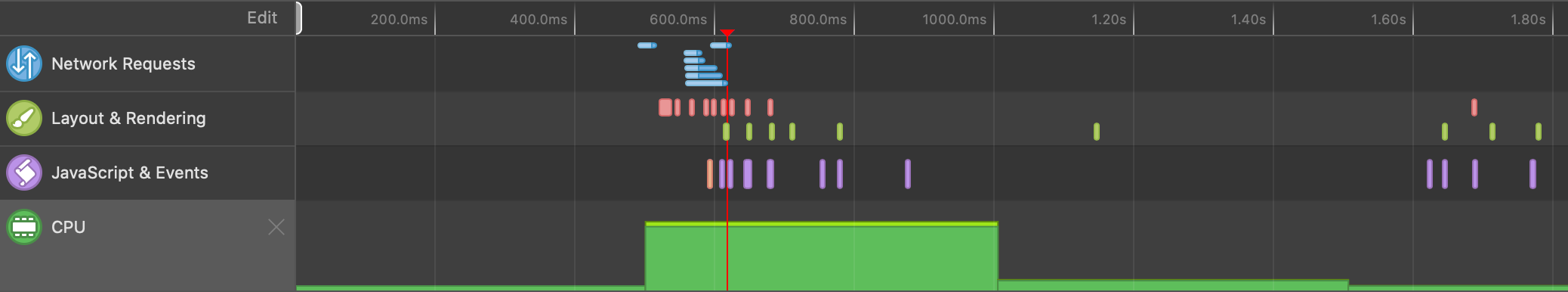

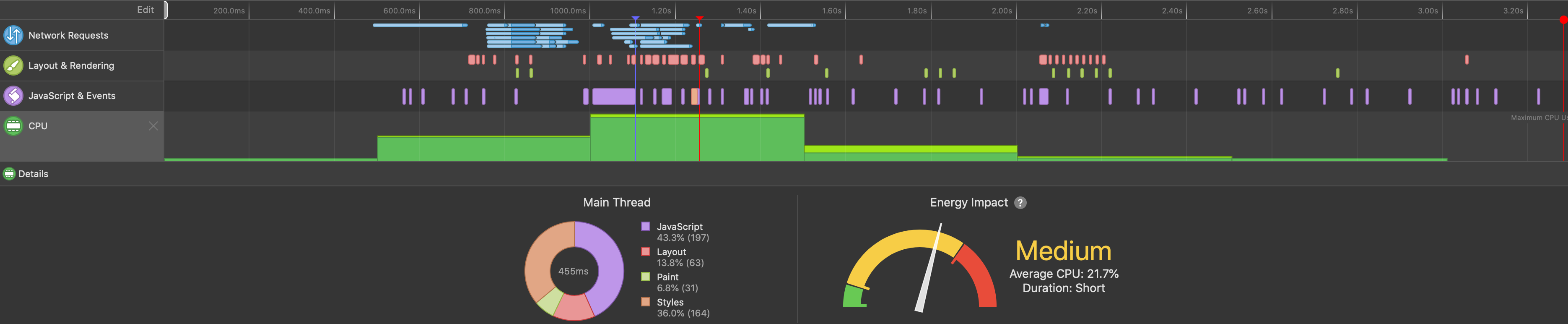

Most of the tools you need to optimize content and code are built into your browser. Most browsers include a Web Inspector, which lets you see what's going on inside the browser and how the various parts of a site interact with your system. You can profile the page load and see how much of your system's resources the website requires.
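The browser's profiler gives the full picture. For a quick command-line check of a single resource, here is a minimal Python sketch that times one GET request: the time until the response headers arrive (an approximation of time to first byte), the total time, and the body size as received. The `measure` name is hypothetical, and the `Cache-Control: no-cache` header only asks intermediaries to revalidate; a CDN may ignore it.

```python
import http.client
import time
from urllib.parse import urlsplit


def measure(url: str, timeout: float = 10.0) -> dict:
    """Time one GET: headers received (approx. TTFB), total time, body bytes."""
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    start = time.perf_counter()
    conn = conn_cls(parts.hostname, parts.port, timeout=timeout)
    try:
        # Ask caches along the way to revalidate instead of serving a stored copy.
        conn.request("GET", path, headers={"Cache-Control": "no-cache"})
        resp = conn.getresponse()  # returns once the status line and headers arrive
        ttfb = time.perf_counter() - start
        body = resp.read()  # http.client does not decompress, so this is wire size
        total = time.perf_counter() - start
    finally:
        conn.close()
    return {
        "status": resp.status,
        "ttfb_ms": ttfb * 1000,
        "total_ms": total * 1000,
        "bytes": len(body),
    }
```

Because the timer starts before the connection opens, `ttfb_ms` includes DNS lookup and connection setup, which is the same waiting a first-time visitor experiences.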

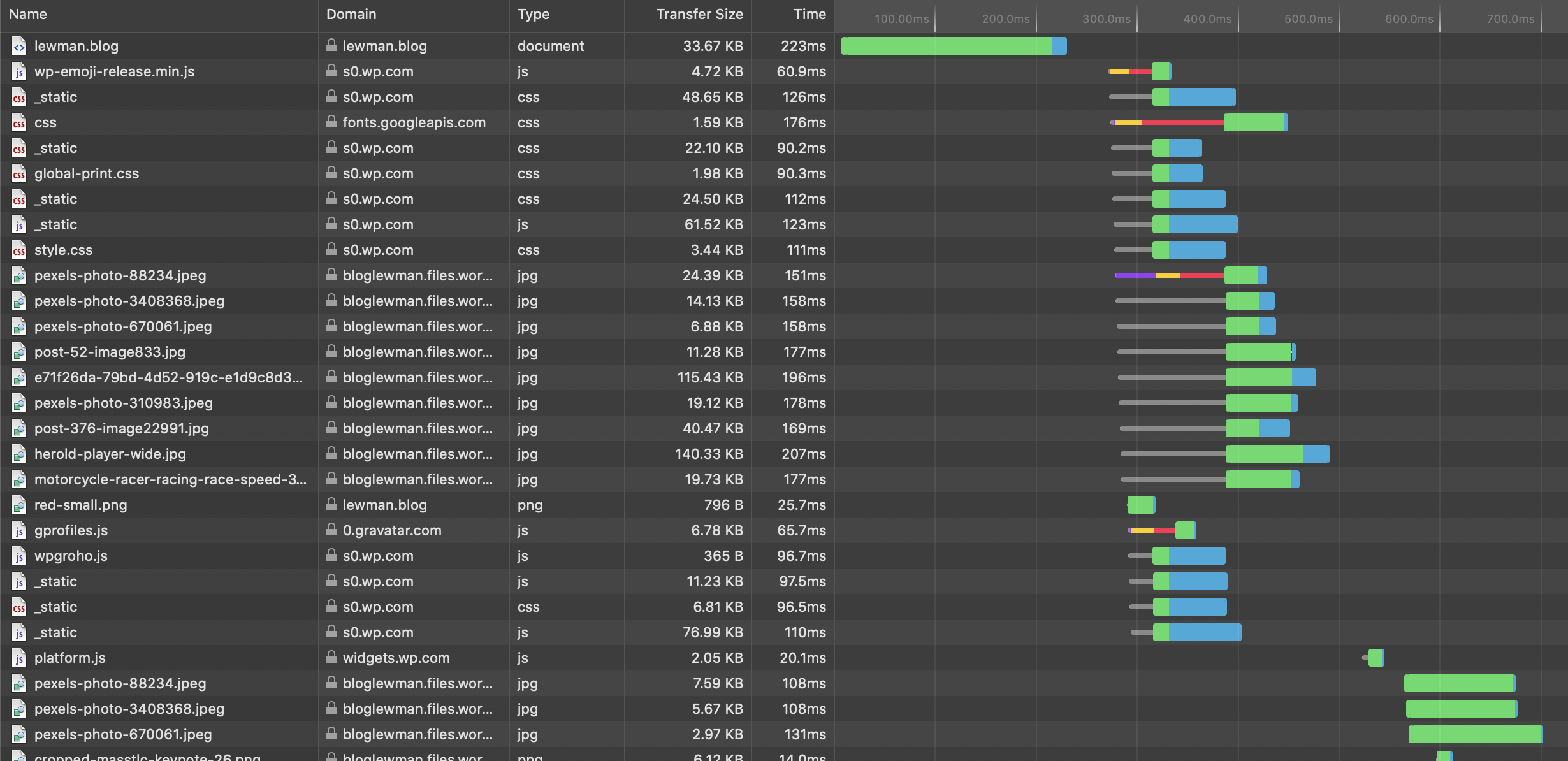

Above is a breakdown of a fresh, uncached load of my site. Running the same tools on the homepage of this blog, again with a forced reload that bypasses the cache, shows this:

All of this time is spent waiting for the CDN to figure out where I am and which server has the content, and then finally serving the content to me. Granted, 208 ms is still very fast, but it still annoys me. The goal, obviously, is that for popular sites this latency hits only the first person in a given location within a given period of time. Everyone else near that first person benefits, because their subsequent requests for the same content are served from the cache the first request populated.
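The first-visitor penalty can be modeled with a toy edge cache keyed by location and path: a miss pays for the trip back to the origin, while a hit within the time-to-live is served locally. The latency figures and TTL below are made-up parameters, not measurements of any real CDN, and real CDNs layer routing, tiered caches, and request coalescing on top of this.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeCache:
    """Toy CDN model: one cache entry per (location, path), expiring after ttl_s."""

    origin_ms: float = 200.0  # assumed extra cost of a miss (fetch from origin)
    edge_ms: float = 10.0     # assumed cost of serving from the edge
    ttl_s: float = 300.0      # assumed time-to-live for cached entries
    _stored_at: dict = field(default_factory=dict, init=False, repr=False)

    def request(self, location: str, path: str, now_s: float) -> float:
        """Return the simulated latency in ms for one request at time now_s."""
        key = (location, path)
        stored = self._stored_at.get(key)
        if stored is not None and now_s - stored < self.ttl_s:
            return self.edge_ms  # someone nearby already warmed this edge
        self._stored_at[key] = now_s  # miss: fetch from origin, then cache it
        return self.origin_ms + self.edge_ms
```

In this model, the first visitor from one city pays 210 ms, a neighbor a few seconds later pays 10 ms, and a visitor from a different city pays the full 210 ms again, which is exactly the pattern described above.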

CDN Testing

When I was hosting some busy sites, I used CoralCDN for a while. As expected, it sped up page delivery around the world, but that first request from a new location was a bear: it took many seconds to transfer the content to the local Coral server and then serve it to the requester. In most cases, it was faster to hit the source site than to go through CoralCDN. Coral really shone when the source site was down or blocked. Say someone blocked Lewman.com? Lewman.com.nyud.net worked great, every time.
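Using Coral only required a hostname suffix: lewman.com became lewman.com.nyud.net. A small Python sketch of that rewrite follows; the `coralize` name is hypothetical, and because this example doesn't model how Coral treated non-default ports, it rejects URLs that specify one. It also drops any `user:password@` part of the URL.

```python
from urllib.parse import urlsplit, urlunsplit


def coralize(url: str, suffix: str = ".nyud.net") -> str:
    """Rewrite a URL so its hostname carries the CoralCDN suffix.

    Sketch only: assumes the origin uses the default port and keeps the
    scheme, path, query and fragment unchanged.
    """
    parts = urlsplit(url)
    if parts.hostname is None:
        raise ValueError(f"no hostname in {url!r}")
    if parts.port is not None:
        raise ValueError("non-default ports are not handled in this sketch")
    host = parts.hostname  # urlsplit lowercases the hostname
    if not host.endswith(suffix):
        host += suffix
    return urlunsplit((parts.scheme, host, parts.path, parts.query, parts.fragment))
```

For example, `coralize("http://Lewman.com/blog?p=1")` returns `"http://lewman.com.nyud.net/blog?p=1"`, and running it on an already-rewritten URL leaves it unchanged.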

Later, I tested mirrors of sites on various CDNs and found a direct correlation between site speed and usage: the faster the site, the more people used it.

Conclusion

Creating and optimizing very fast sites is a hobby of mine. It used to be my job, but sometimes it's not worth it to "pin the butterfly."