High Performance lewman.com

History

On February 5th, 1998, the lewman.com domain name went live on the Internet. For some reason, I've saved the original email from the registrar and hosting company. In fact, I'm pretty sure the domain was registered earlier, but I didn't save the Network Solutions email.

Staging Environment

A few years ago, I bought a brand new Raspberry Pi 3 B+ and used it as a staging environment for the site. I still do this today. The goal is to have everything work and perform well on a Raspberry Pi. The assumption is that if the site works well in this staging environment, it should work well in production on the live Internet. Ultimately, I want the site to be as fast as possible. What does this mean in practical steps?

The Technology

I used a static site generator called Jekyll. I wrote my own templates and eventually hired a designer to make a theme for me. The goal is not just a site that loads fast from the server, but one that renders fast in the browser as well, on desktops, laptops, all the way to mobile phones and Raspberry Pi "desktops". This meant CSS and HTML only, no JavaScript, and no external dependencies (like jQuery, Google Analytics, or third-party fonts).

I started off with the Apache web server, moved to Nginx, and finally used Lighttpd for a few years. In the past year, I moved to Caddy, as the configuration is much easier, the TLS layer is handled automatically, and "it just works". I experimented with cache expiry and tuning the TCP stack of the underlying operating system to make everything as fast as possible. The goal was not just a fast Time To First Byte, but a fast total time to display the page in your browser. I used Browsera to test my site in different browsers and note how different CSS and HTML elements were rendered by each browser. I believe the site should look and feel the same regardless of browser. And it should do so quickly.
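When experimenting with cache expiry, it helps to confirm what lifetime the server actually sends. The following is a minimal sketch, not the configuration used on this site: `max_age` is a hypothetical helper name, and the sample headers are made up for illustration. It reads HTTP response headers on standard input and prints the `max-age` value from the `Cache-Control` header, if there is one.

```shell
#!/bin/sh
# max_age: hypothetical helper, not part of the site's tooling.
# Reads HTTP response headers on stdin and prints the max-age value
# (in seconds) found in the Cache-Control header, if any.
max_age() {
  tr -d '\r' | awk -F': *' 'tolower($1) == "cache-control" {
    n = split($2, parts, /, */)          # split the directives on commas
    for (i = 1; i <= n; i++)
      if (parts[i] ~ /^max-age=/) {
        sub(/^max-age=/, "", parts[i])   # keep only the number
        print parts[i]
      }
  }'
}

# Sample headers, invented for illustration only.
printf 'HTTP/2 200\r\ncache-control: public, max-age=86400\r\ncontent-type: text/html\r\n' | max_age
# prints 86400
```

Against a live server, the same function can be fed real headers with `curl -sI https://lewman.com | max_age`. If nothing is printed, the response carries no `max-age` directive.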

Jekyll was great for years. Last month, I migrated to Publii. It's also a static site generator, but modifying the site, the themes, and the content takes less time than it did with Jekyll. I mostly look for technologies which "just work".

Performance

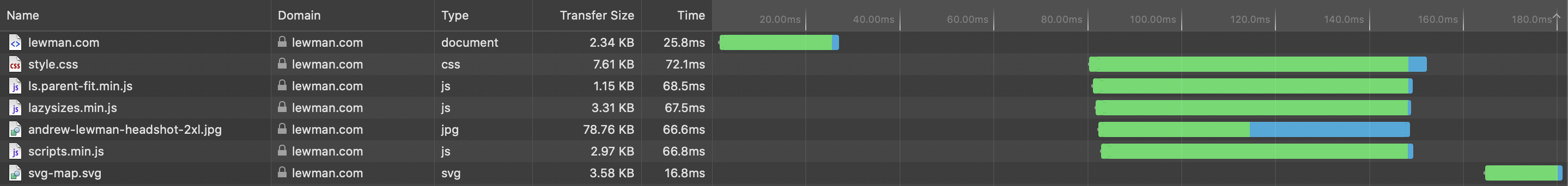

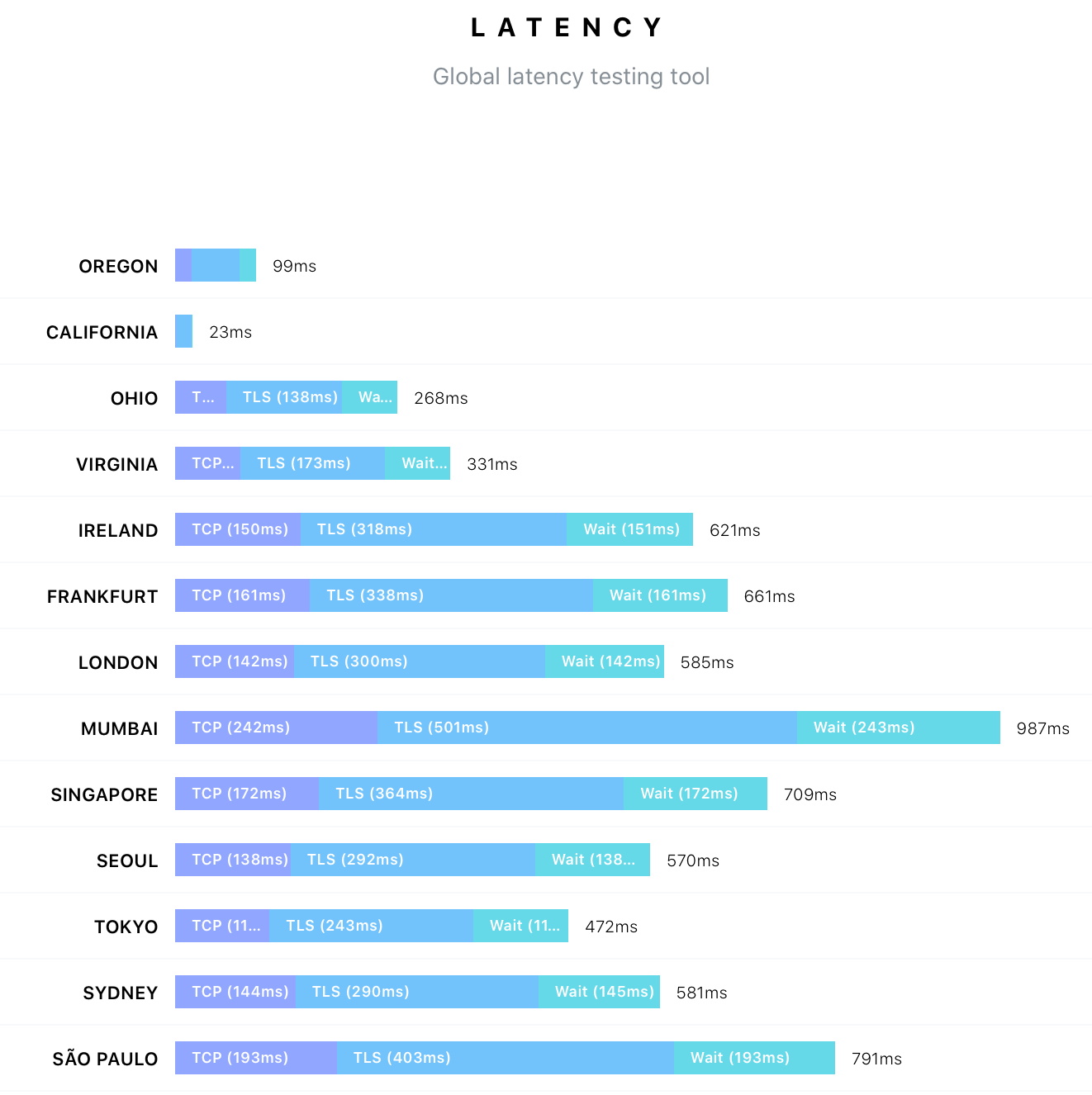

Currently, the whole site loads in under 200 milliseconds, though we'll come back to why this is a false measure. There are still waiting periods and lag I'm trying to reduce. However, I think some of the latency between my home internet connection and the server is simply outside my control. Here's what I mean:

False Measures

I mentioned above that loading in under 200 milliseconds is a false measure. Like many things, performance is relative. I'm in San Francisco and the server is across the Bay in Fremont. Of course it's fast.

Here is the measurement of the site from the server itself:

curl -o /dev/null -w "Connect: %{time_connect} TTFB: %{time_starttransfer} Total time: %{time_total} \n" https://lewman.com

Connect: 0.452609 TTFB: 0.456200 Total time: 0.456411

Still super fast.

Here's the same test from various servers on the Internet:

Fremont, California:

Connect: 0.201873 TTFB: 0.506216 Total time: 0.506718

Dublin, Ireland:

Connect: 0.314001 TTFB: 0.713210 Total time: 0.713633

Chennai, India:

Connect: 1.738992 TTFB: 2.206990 Total time: 2.207473
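One way to read these numbers is to split each line into phases. The sketch below assumes the exact `Connect: … TTFB: … Total time: …` format produced by the curl command above; `breakdown` is a hypothetical helper name. Note that curl's `time_connect` is cumulative from the start of the request, so it includes the DNS lookup, and the gap between it and `time_starttransfer` covers the TLS handshake as well as the server's own processing.

```shell
#!/bin/sh
# breakdown: hypothetical helper that splits one timing line into phases.
# Expected input: "Connect: C TTFB: T Total time: X" (all in seconds).
#   connect  = time until the TCP connection is up (includes DNS lookup)
#   wait     = TTFB - connect (TLS handshake, request, server processing)
#   download = total - TTFB (time spent receiving the response body)
breakdown() {
  printf '%s\n' "$1" | awk '{
    connect = $2; ttfb = $4; total = $7
    printf "connect=%.3f wait=%.3f download=%.3f\n", connect, ttfb - connect, total - ttfb
  }'
}

# The Chennai measurement from this post.
breakdown "Connect: 1.738992 TTFB: 2.206990 Total time: 2.207473"
# prints connect=1.739 wait=0.468 download=0.000
```

Read this way, the Chennai result spends most of its time just establishing the connection, and almost none transferring the page itself.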

If you're in India, the site loads in roughly 2.2 seconds. It's still fast, but not as fast as possible. Here's another set of testing results:

As you can see on BuiltWith, I've used a number of technologies over the years to make the site perform well and still be fully functional.

I've started experimenting with real peer-to-peer technologies for serving up the content, such as IPFS, ScuttleButt, and the like. However, they're experimental themselves and clearly not designed for performance. All of this is running on my development Raspberry Pi 3 B+, which sits next to my staging 3 B+ in the cluster rack under my desk.

Conclusion

There is no conclusion. Performance testing and optimization continue. But now you have a quick background on the site, some of its history, and a glimpse into my three-stage development environment. It's easy to replicate, and maybe you'll have some suggestions or thoughts. Happy to learn more. Thanks for reading this far!