Ode to Apache Drill

How do you solve the petabyte logfile challenge?

'twas upon a midnight dreary,

sql queries running through eternity,

database errors making me weary.

Stumble upon drill by Apache,

bits and SQL galore,

data lakes draining with ease,

thanks to Drill's SQL core.

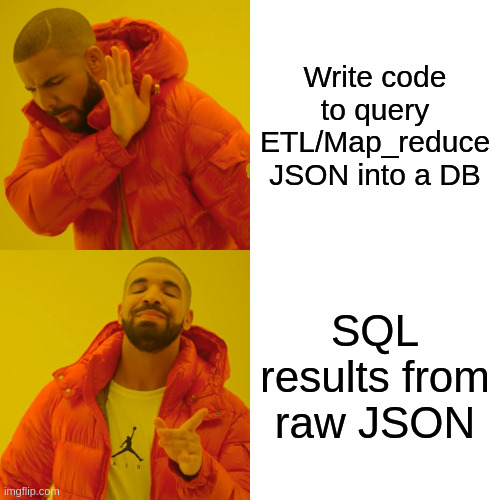

The normal process for querying data is basically this:

[ raw data ] --> ( Extract, Transform, Load and/or Map-Reduce process ) --> ( insert to database ) --> [ SQL/NoSQL query against the tables ] --> { results }

With Apache Drill, we have this:

[ raw data ] --> [ SQL query against the data ] --> { results }

There are some assumptions here, that the raw data is in some sort of semi-structured format already--such as CSV, syslog, JSON, or parquet. If not, then you have to add in some sort of ETL/MapR process on the raw data, preferably storing the result in parquet format.

Let's take some examples. This webserver stores the logfile in JSON format. Here's a single line from the log file:

{"level":"info","ts":1608664799.0497372,"logger":"http.log.access.log2","msg":"handled request","request":{"remote_addr":"73.71.4.138:62848","proto":"HTTP/2.0","method":"HEAD","host":"blog.lewman.com","uri":"/","headers":{"User-Agent":["curl/7.64.0"],"Accept":["*/*"]},"tls":{"resumed":false,"version":772,"cipher_suite":4865,"proto":"h2","proto_mutual":true,"server_name":"blog.lewman.com"}},"common_log":"73.71.4.138 - - [22/Dec/2020:19:19:59 +0000] \"HEAD / HTTP/2.0\" 200 0","duration":0.000131157,"size":0,"status":200,"resp_headers":{"Accept-Ranges":["bytes"],"Strict-Transport-Security":["max-age=31536000;"],"X-Content-Type-Options":["nosniff"],"X-Frame-Options":["DENY"],"Content-Type":["text/html; charset=utf-8"],"Last-Modified":["Thu, 17 Dec 2020 08:04:24 GMT"],"Server":["Caddy"],"X-Xss-Protection":["1; mode=block"],"Cache-Control":["max-age=31536000"],"Etag":["\"qlh53c9kr\""],"Content-Length":["12411"]}}

Ok, that's messy. Let's make it easier to read:

{ "level": "info", "ts": 1608664799.0497372, "logger": "http.log.access.log2", "msg": "handled request", "request": { "remote_addr": "73.71.4.138:62848", "proto": "HTTP/2.0", "method": "HEAD", "host": "blog.lewman.com", "uri": "/", "headers": { "User-Agent": [ "curl/7.64.0" ], "Accept": [ "*/*" ] }, "tls": { "resumed": false, "version": 772, "cipher_suite": 4865, "proto": "h2", "proto_mutual": true, "server_name": "blog.lewman.com" } }, "common_log": "73.71.4.138 - - [22/Dec/2020:19:19:59 +0000] \"HEAD / HTTP/2.0\" 200 0", "duration": 0.000131157, "size": 0, "status": 200, "resp_headers": { "Accept-Ranges": [ "bytes" ], "Strict-Transport-Security": [ "max-age=31536000;" ], "X-Content-Type-Options": [ "nosniff" ], "X-Frame-Options": [ "DENY" ], "Content-Type": [ "text/html; charset=utf-8" ], "Last-Modified": [ "Thu, 17 Dec 2020 08:04:24 GMT" ], "Server": [ "Caddy" ], "X-Xss-Protection": [ "1; mode=block" ], "Cache-Control": [ "max-age=31536000" ], "Etag": [ "\"qlh53c9kr\"" ], "Content-Length": [ "12411" ] }}

So, it should be pretty easy to query. Let's find which protocols are requested.

SELECT request['proto'] AS protocol, COUNT(request['proto']) AS requests FROM dfs.root.`caddy-access-log.json` GROUP BY 1 ORDER BY 2;

and the results:

+----------+----------+| protocol | requests |+----------+----------+| HTTP/1.0 | 17575 || HTTP/2.0 | 1044343 || HTTP/1.1 | 7247998 |+----------+----------+3 rows selected (151.594 seconds)

This query ran against the raw log file, there was no database, no ETL/MapR process, just the Drillbit SQL engine and results. It's not database fast, but compared to the end-to-end process (as shown above with ETL/MaP-R and so on) it is far faster. For a 20 GB log file of JSON on a slow system, this is quick.

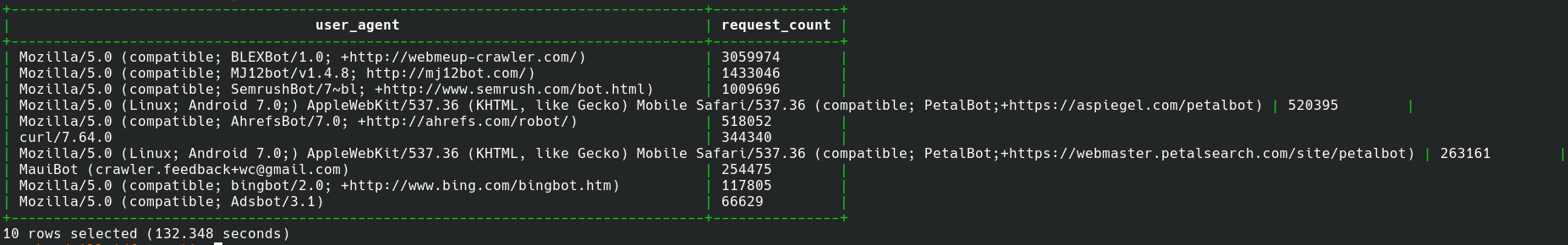

Let's tackle a more complex example. What are the top 10 user-agents in the logfile?

SELECT request['headers']['User-Agent'][0] AS user_agent, count(request['headers']['User-Agent'][0]) AS request_count FROM dfs.root.`caddy-access-log.json` GROUP BY 1 ORDER BY 2 DESC LIMIT 10;

and the results (as an image to fit on the page):

There you go, way more about Apache Drill than you ever wanted to know.

But wait, there's more!

How can we make these queries faster? Once again, Apache Drill has the answer. Take the data you'll eventually want and save it as a temporary table in parquet format.

Therefore, let's dump the raw data field we want into a parquet table in temporary storage:

CREATE TABLE user_agents AS SELECT request['headers']['User-Agent'][0] AS user_agent FROM dfs.root.`caddy-access-log.json`;

the results of the CREATE TABLE:

+----------+---------------------------+| Fragment | Number of records written |+----------+---------------------------+| 0_0 | 8309948 |+----------+---------------------------+1 row selected (150.975 seconds)

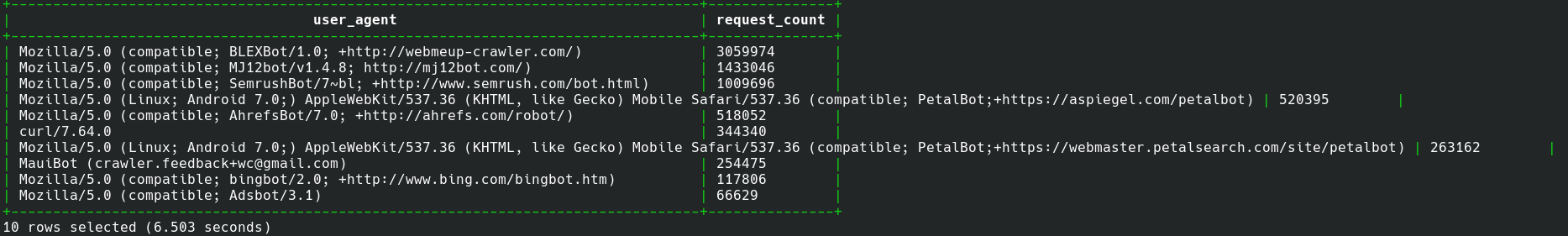

And then run a query:

SELECT user_agent, count(user_agent) AS request_count FROM dfs.tmp.`user_agents` GROUP BY 1 ORDER BY 2 DESC LIMIT 10;

and the results (again as an image to fit on the page):

Yes, that says we queried 8.3 million records in 6.5 seconds. Is that as fast as a traditional database? No. Is it vastly easier to use and get data? Yes!

But wait, there's EVEN MORE!

The raw JSON logfile is 20 GB. The parquet temp table is.....625 MB.

In summary, Apache Drill is awesome. I think it's the future of data lakes and data analysis as data volumes get larger and larger.