The Performance Difference with One GPU

The third installment in a series so far. Previously, and previously. I bought an AMD RX 9070 XT with 16GB of VRAM for roughly $800 including shipping, solely to run llama/ollama LLM models. It was cheap enough compared to Nvidia cards that any increase in performance makes it worthwhile. Getting right to the point: under the same conditions (same OS, same CPU, same ollama version, same model, same prompt), the GPU is about seven times faster than the CPU. I ran the test 10 times in each configuration (CPU-only and GPU-only). That works out to roughly $114 per 1x of speedup ($800 / 7). A comparison table is below.

| Statistic | CPU-only total duration (s) | GPU-only total duration (s) | Speedup (CPU ÷ GPU) |
|---|---|---|---|
| Minimum | 105.426898402 | 10.653931784 | 9.9 |
| Median | 115.635362649 | 16.864972407 | 6.9 |
| Maximum | 183.043312778 | 28.414720596 | 6.4 |
| Average | 126.2855332733 | 17.1327122768 | 7.4 |
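The speedup column is the CPU duration divided by the GPU duration for each statistic. The dollars-per-speedup figure in the intro is the card price divided by the rounded 7x ratio. Here is a small sketch of that arithmetic using the table values and the approximate $800 price. The variable and function names are my own.

```python
# Durations in seconds, copied from the table above.
CPU_SECONDS = {
    "minimum": 105.426898402,
    "median": 115.635362649,
    "maximum": 183.043312778,
    "average": 126.2855332733,
}
GPU_SECONDS = {
    "minimum": 10.653931784,
    "median": 16.864972407,
    "maximum": 28.414720596,
    "average": 17.1327122768,
}
CARD_PRICE_USD = 800  # approximate price including shipping


def speedup(cpu_seconds: float, gpu_seconds: float) -> float:
    """How many times faster the GPU run is than the CPU run."""
    return cpu_seconds / gpu_seconds


def dollars_per_speedup(price: float, factor: float) -> float:
    """Card price divided by the speedup factor."""
    return price / factor


if __name__ == "__main__":
    for stat, cpu in CPU_SECONDS.items():
        print(f"{stat}: {speedup(cpu, GPU_SECONDS[stat]):.1f}x")
    # The text rounds the speedup to 7x before dividing the price.
    print(f"${dollars_per_speedup(CARD_PRICE_USD, 7):.0f} per 1x of speedup")
```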

The test is a simple fish shell `for` loop:

```fish
for run in (seq 10)
    echo "what is the airspeed of an unladen swallow?" | ollama run qwen3:14b --verbose | grep eval
end
```

The result: for the same prompt and model, the GPU returns the full response in about 17 seconds on average, compared with about 126 seconds on the CPU. Seventeen seconds still isn't instantaneous, but for the money it's far cheaper than renting a GPU or buying a higher-end "enterprise" GPU. I'm curious whether pairing two GPUs would let me use them as one logical GPU, with the load spread across both cards.
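The `--verbose` statistics have to be converted to seconds before they can go into a table like the one above. The sketch below assumes each statistic line looks like `total duration:       1m45.426898402s`: a label, a colon, and a Go-style duration string. I haven't checked that format against every Ollama version, so treat it as an assumption. The function names are hypothetical.

Note that `grep eval` would not match a `total duration` line, yet the totals were still recorded. That suggests Ollama writes these statistics to stderr, which bypasses the pipe. Saving them to a file would then need a stderr redirect (`2>`).

```python
import re

# Seconds per unit for the suffixes a Go duration string can use (assumed format).
_UNIT_SECONDS = {
    "h": 3600.0,
    "m": 60.0,
    "s": 1.0,
    "ms": 1e-3,
    "us": 1e-6,
    "\u00b5s": 1e-6,  # micro sign, as Go prints it
    "\u03bcs": 1e-6,  # Greek small mu, also accepted by Go's parser
    "ns": 1e-9,
}
# "ms" is listed before "m" so "1.5ms" is not read as 1.5 minutes.
_UNIT_PATTERN = "h|ms|m|s|us|\u00b5s|\u03bcs|ns"
_PART = re.compile(rf"(\d+(?:\.\d+)?)({_UNIT_PATTERN})")
_WHOLE = re.compile(rf"(?:\d+(?:\.\d+)?(?:{_UNIT_PATTERN}))+")


def go_duration_to_seconds(text: str) -> float:
    """Convert a Go duration string such as '1m45.4s' or '850ms' to seconds."""
    text = text.strip()
    if not _WHOLE.fullmatch(text):
        raise ValueError(f"not a Go duration string: {text!r}")
    return sum(float(number) * _UNIT_SECONDS[unit] for number, unit in _PART.findall(text))


def parse_verbose_durations(output: str) -> dict[str, float]:
    """Collect '<label> duration: <value>' lines from captured --verbose output."""
    durations = {}
    for line in output.splitlines():
        label, sep, value = line.partition(":")
        label = label.strip()
        if not (sep and label.endswith("duration")):
            continue
        try:
            durations[label] = go_duration_to_seconds(value)
        except ValueError:
            # Skip lines (e.g. from the model's answer) that only look similar.
            continue
    return durations
```

Usage: `parse_verbose_durations(captured_text)["total duration"]` gives one run's total in seconds.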

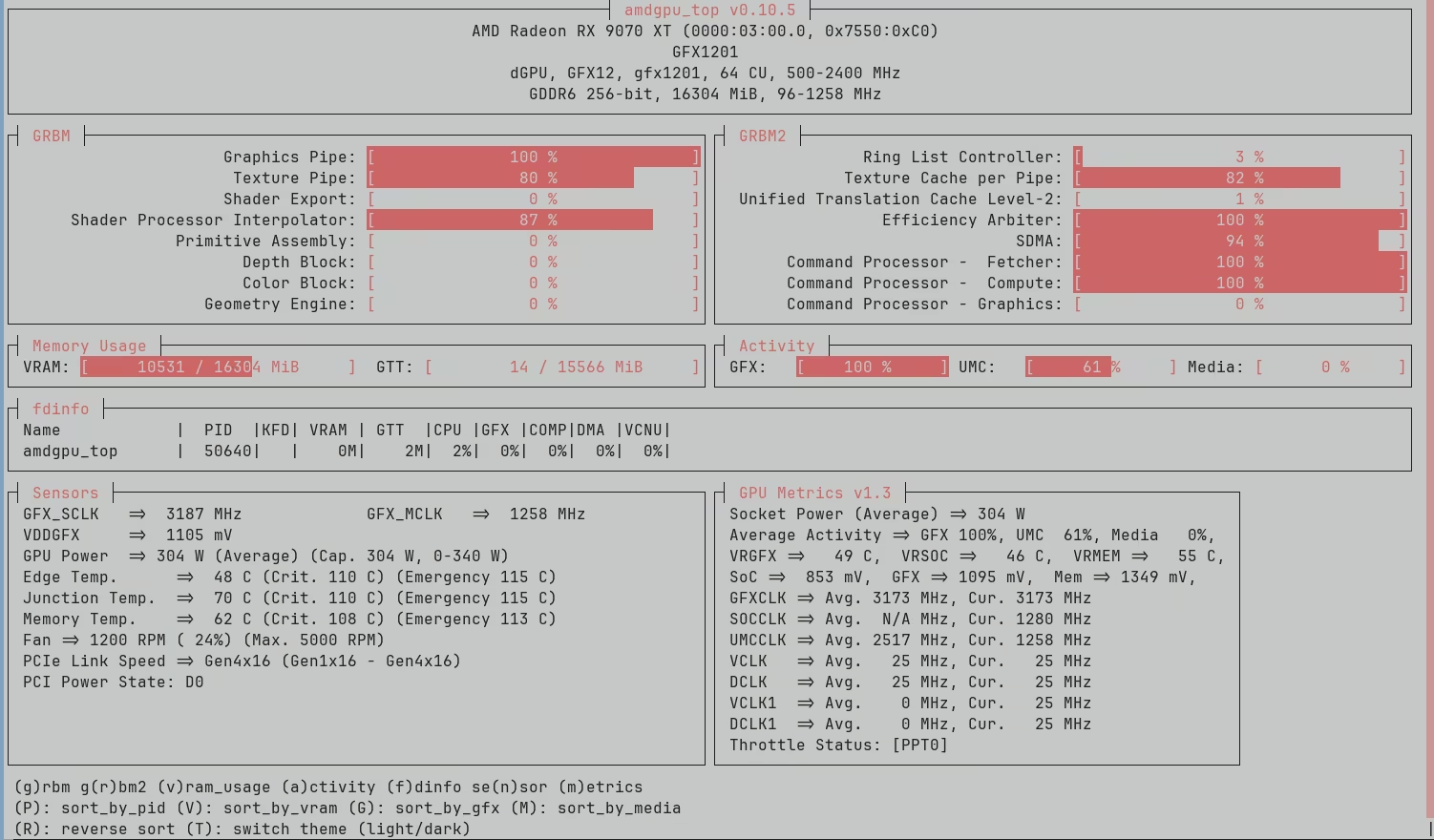

I don't know why there is so much variance in the results. The system was otherwise idle while the prompt ran, and the model was loaded into memory before the tests started. Unless I hit some thermal limit on the GPU (I didn't see one while watching amdgpu_top), I'm not sure why the fastest GPU run was 9.9x faster than the fastest CPU run when the median ratio was only 6.9x.
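Total duration also depends on how many tokens the model generates. A model like qwen3 may produce answers of quite different lengths for the same prompt. One possible check, which is my assumption and not something these tests measured, is to compare per-run throughput (`eval count` divided by `eval duration` from the `--verbose` output) against total duration. If throughput stays steady while total duration swings, the spread likely comes from response length rather than the GPU. Here is a sketch, assuming you have those numbers for each run. The function names are hypothetical.

```python
from statistics import mean, stdev


def tokens_per_second(eval_count: int, eval_duration_s: float) -> float:
    """Generation throughput for one run."""
    return eval_count / eval_duration_s


def coefficient_of_variation(values: list[float]) -> float:
    """Sample standard deviation divided by the mean (needs at least two values)."""
    return stdev(values) / mean(values)


def compare_spread(runs: list[tuple[int, float]], totals_s: list[float]) -> dict[str, float]:
    """Contrast the relative spread of total durations with that of tokens/s.

    runs holds (eval_count, eval_duration_in_seconds) per run;
    totals_s holds each run's total duration in seconds.
    """
    rates = [tokens_per_second(count, seconds) for count, seconds in runs]
    return {
        "total_duration_cv": coefficient_of_variation(totals_s),
        "tokens_per_second_cv": coefficient_of_variation(rates),
    }
```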

This is what amdgpu_top shows when the prompt is running:

The full test results are available as CSV files: CPU-only and GPU-only.
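Something like the following would reproduce the minimum, median, maximum, and average rows from per-run CSV files. I don't know the exact layout of those files, so the column name `total_duration_seconds` is a hypothetical placeholder. Adjust it to whatever the CSV actually uses.

```python
import csv
from statistics import mean, median
from typing import Iterable


def load_durations(lines: Iterable[str], column: str = "total_duration_seconds") -> list[float]:
    """Read one duration (in seconds) per CSV row from the named column.

    lines can be an open file or any iterable of CSV text lines.
    """
    return [float(row[column]) for row in csv.DictReader(lines)]


def summarize(durations: list[float]) -> dict[str, float]:
    """The four statistics used in the comparison table."""
    return {
        "minimum": min(durations),
        "median": median(durations),
        "maximum": max(durations),
        "average": mean(durations),
    }
```

Usage, with a hypothetical file name: `with open("cpu-only.csv", newline="") as f: print(summarize(load_durations(f)))`. Dividing each CPU statistic by the matching GPU statistic gives the speedup column.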