Blog Stats Through 2023

This blog is generated by Publii, which is a pile of JavaScript around leveldb and SQLite databases. All of the content and everything that makes up the blog is in the SQLite database. In theory, it should be easy to get some stats out of the database.

I started writing a script in Julia to generate stats and pretty graphs, but then realized maybe some stochastic pigeon could help this post along with raw SQL queries. Four hours later, I have functional SQL queries that do what I want. In the four hours it took the pigeon to figure out functional SQL and SQLite specific SQL, I could've written everything myself. However, so be it. These stats are through 2023, which does not include this post.

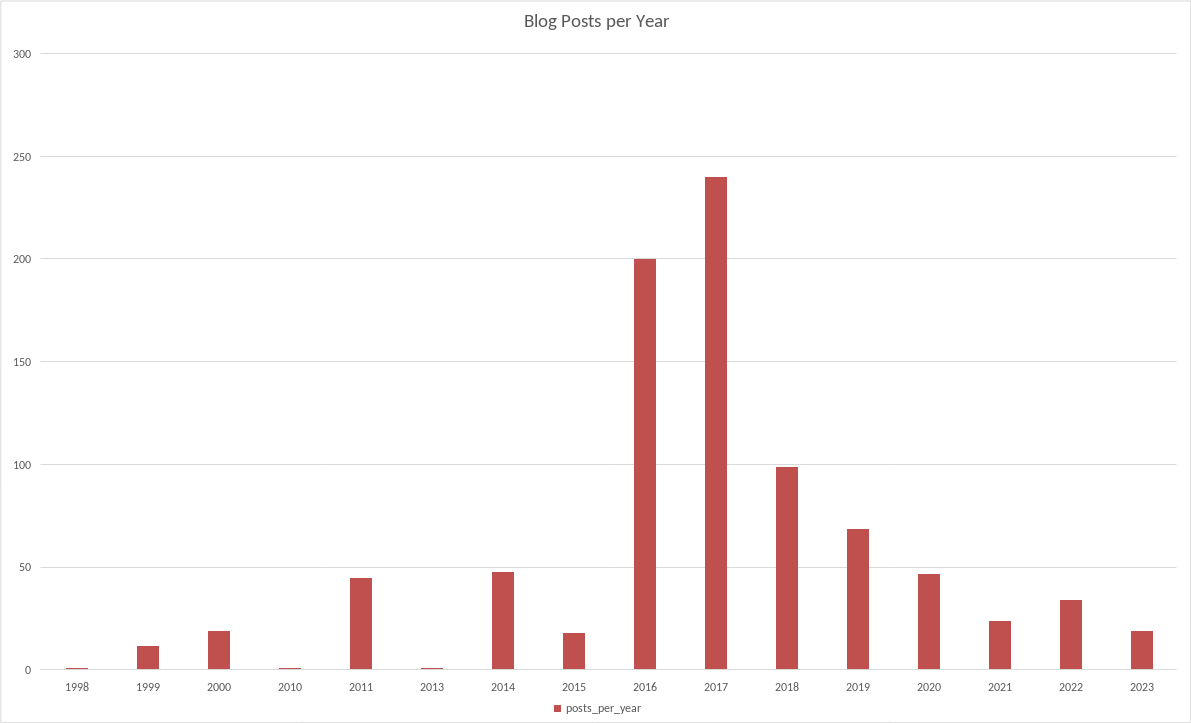

Blog Posts per Year

There are 885 posts from 1998-12-04T22:42:00 through 2023-12-08T07:15:20.731. Yes, we're getting very precise down to the milliseconds a post is created. In normal speak, 1998 to 2023. The SQL for this table is:

SELECT

strftime('%Y', datetime((created_at/1000), 'unixepoch')) AS year,

COUNT(*) AS posts_per_year

FROM posts

GROUP BY year;year posts_per_year

1998 1

1999 12

2000 19

2010 1

2011 45

2013 1

2014 48

2015 18

2016 200

2017 240

2018 99

2019 69

2020 47

2021 24

2022 34

2023 19

The first thing I notice is I'm missing 2001 through 2010. There's one post in 2010, but seems odd I only posted once in 9 years. I think these missing years are when I migrated to a different domain. Internet Archive Wayback Machine has them, I just have to figure out how to pull them out or find them in some archive. I'm sure an LLM/stochastic pigeon can figure it out pretty easily </sarcasm>. I do have the original posts in plaintext file format buried in archives. I'll figure out how to automate pulling apart the various tarballs and importing the content into Publii. It seems I switched domains, content systems, but kept my sanity in keeping everything in plaintext files throughout it all.

Blog Posts per Month per Year

The SQL:

SELECT

strftime('%Y', datetime((created_at/1000), 'unixepoch')) AS year,

strftime('%m', datetime((created_at/1000), 'unixepoch')) AS month,

COUNT(*) AS posts_per_month

FROM posts

GROUP BY year, month;year month posts_per_month

1998 12 1

1999 1 7

1999 2 3

1999 4 2

2000 3 1

2000 4 4

2000 5 1

2000 6 4

2000 9 8

2000 10 1

2010 3 1

2011 5 5

2011 6 6

2011 7 4

2011 8 6

2011 9 4

2011 10 5

2011 11 7

2011 12 8

2013 8 1

2014 1 5

2014 2 1

2014 3 7

2014 4 3

2014 5 4

2014 6 4

2014 7 3

2014 8 9

2014 9 4

2014 10 2

2014 11 5

2014 12 1

2015 1 3

2015 3 2

2015 4 4

2015 5 2

2015 6 3

2015 9 2

2015 11 1

2015 12 1

2016 1 1

2016 2 4

2016 3 1

2016 4 1

2016 5 28

2016 6 21

2016 7 18

2016 8 11

2016 9 22

2016 10 36

2016 11 29

2016 12 28

2017 1 21

2017 2 11

2017 3 17

2017 4 16

2017 5 40

2017 6 14

2017 7 35

2017 8 25

2017 9 15

2017 10 22

2017 11 10

2017 12 14

2018 1 13

2018 2 8

2018 3 4

2018 4 8

2018 5 5

2018 6 6

2018 7 6

2018 8 9

2018 9 8

2018 10 14

2018 11 8

2018 12 10

2019 1 6

2019 2 3

2019 3 5

2019 4 5

2019 5 6

2019 6 8

2019 7 6

2019 8 4

2019 9 9

2019 10 10

2019 11 2

2019 12 5

2020 1 6

2020 2 6

2020 3 6

2020 4 3

2020 5 4

2020 6 3

2020 7 4

2020 8 2

2020 9 2

2020 10 3

2020 11 3

2020 12 5

2021 1 3

2021 2 3

2021 3 1

2021 4 1

2021 6 2

2021 7 3

2021 8 2

2021 9 1

2021 10 4

2021 11 2

2021 12 2

2022 1 1

2022 2 1

2022 3 1

2022 4 3

2022 5 2

2022 6 5

2022 8 2

2022 9 6

2022 10 2

2022 11 5

2022 12 6

2023 1 1

2023 2 1

2023 3 2

2023 4 1

2023 5 1

2023 6 4

2023 7 2

2023 8 1

2023 9 2

2023 10 2

2023 12 2

Did I really post 40 times in a month in May of 2017? Turns out I did because those are from my trial of Google Plus. For those reading this and not knowing what was Google Plus, Wikipedia has you covered. This blog has outlived so many corporate "social networks", "blog rings", etc. It'll probably outlive Google, Twitter/X, Facebook, etc.

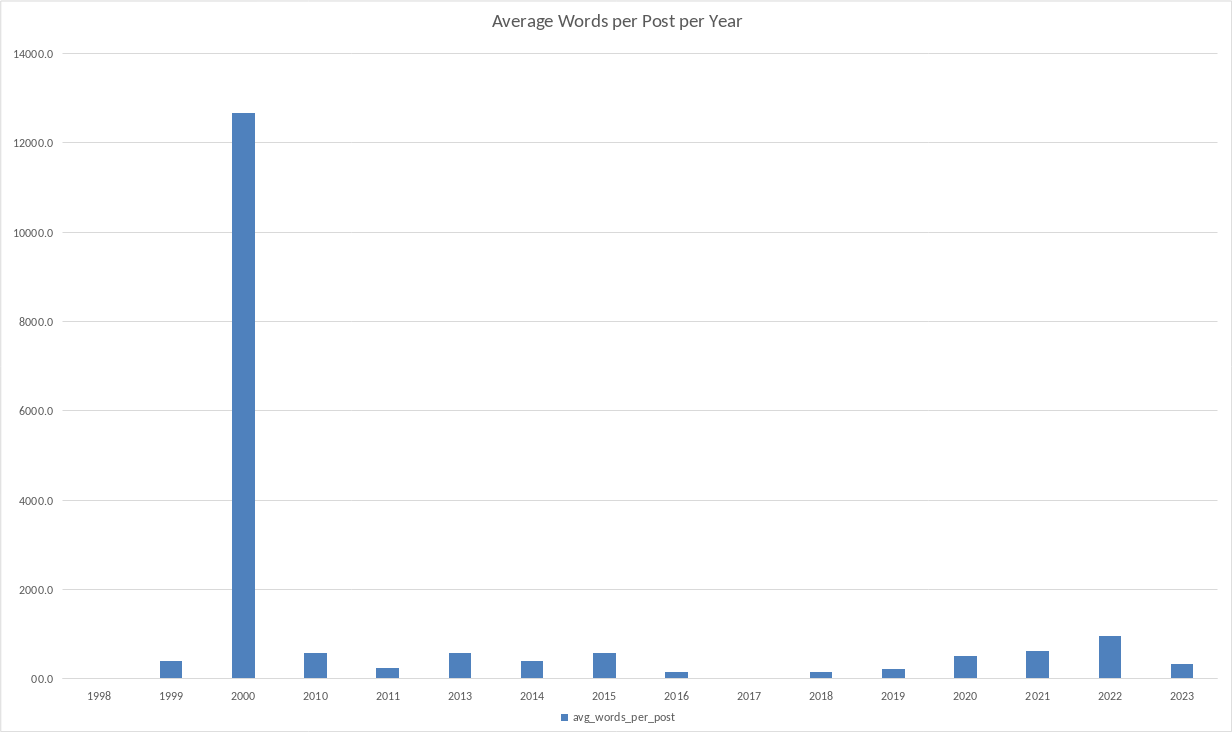

Average Words per Post per Year

The SQL:

SELECT

strftime('%Y', datetime(created_at/1000, 'unixepoch')) AS year,

ROUND(AVG((LENGTH(text) - LENGTH(REPLACE(text, ' ', ''))) + 1)) AS avg_words_per_post

FROM posts

GROUP BY year; year avg_words_per_post

1998 14.0

1999 419.0

2000 12673.0

2010 591.0

2011 250.0

2013 595.0

2014 407.0

2015 593.0

2016 162.0

2017 38.0

2018 165.0

2019 221.0

2020 525.0

2021 633.0

2022 972.0

2023 342.0

The year 2000 stands out as an outlier. Otherwise, it seems to ebb and flow with my free time. However, something stands out in the SQL to me. It's not really counting words, it's counting average length of text between spaces in the content. There are actual libraries to do this accurately, so I should re-work this to more accurately reflect what I wanted.

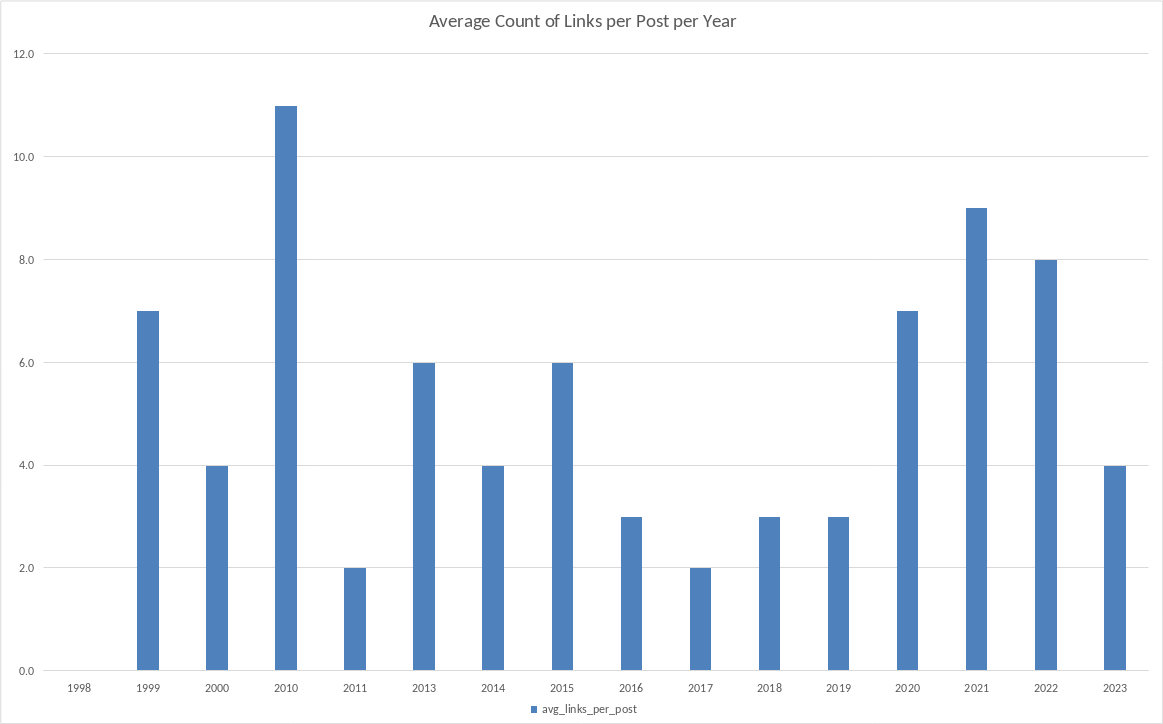

Average Number of Links per Post per Year

The SQL:

SELECT

strftime('%Y', datetime(created_at/1000, 'unixepoch')) AS year,

ROUND(AVG((LENGTH(text) - LENGTH(REPLACE(text, 'http', ''))) / LENGTH('http'))) AS avg_links_per_post

FROM posts

GROUP BY year;year avg_links_per_post

1998 0.0

1999 7.0

2000 4.0

2010 11.0

2011 2.0

2013 6.0

2014 4.0

2015 6.0

2016 3.0

2017 2.0

2018 3.0

2019 3.0

2020 7.0

2021 9.0

2022 8.0

2023 4.0

The Google Plus years of short posting, which are generally 1 story or 1 image in a link drags down the average for those years.

I have a few more personal projects to work on, and then I can re-run these SQL queries (and fix them in some cases) to better reflect on the past 25 years of the blog.